Approximation Algorithms: Packing Problems

These are the lecture notes from Chandra Chekuri's CS583 course on Approximation Algorithms, Chapter 4: Packing Problems.


Chapter 4

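In the previous lecture we discussed the Knapsack problem. In this lecture we discuss other packing and independent set problems. We first discuss an abstract model of packing problems. Let $N$ be a finite ground set. A collection $\mathcal{I} \subseteq 2^N$ of subsets of $N$ is said to be down closed if $A \in \mathcal{I}$ implies $B \in \mathcal{I}$ for all $B \subseteq A$. A down closed collection is also called an independence system, and the sets in $\mathcal{I}$ are called independent sets. Given an independence system $(N, \mathcal{I})$ and a non-negative weight function $w: N \to \mathbb{R}_+$, the maximum weight independent set problem is to find $\max_{S \in \mathcal{I}} w(S)$. When all weights are $1$ we seek an independent set of maximum cardinality. We discuss some canonical examples.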

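Example 4.1. Independent sets in graphs: Given a graph $G = (V, E)$, let $\mathcal{I} = \{S \subseteq V \mid \text{no edge of } E \text{ has both endpoints in } S\}$. Here the ground set is $V$.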

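Example 4.2. Matchings in graphs: Given a graph $G = (V, E)$, let $\mathcal{I} = \{M \subseteq E \mid M \text{ is a matching in } G\}$. Here the ground set is $E$.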

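Example 4.3. Matroids: A matroid $M = (N, \mathcal{I})$ is an independence system that also satisfies the exchange property: if $A, B \in \mathcal{I}$ and $|B| > |A|$, then there is an element $e \in B \setminus A$ such that $A \cup \{e\} \in \mathcal{I}$.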

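Example 4.4. Intersections of independence systems: given some $k$ independence systems $(N, \mathcal{I}_1), \ldots, (N, \mathcal{I}_k)$ on the same ground set, the collection $\mathcal{I} = \mathcal{I}_1 \cap \cdots \cap \mathcal{I}_k$ is again down closed, and hence is an independence system. For example, the matchings of a bipartite graph form the intersection of two partition matroids.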

4.1 Maximum Independent Set Problem in Graphs

A basic graph optimization problem with many applications is the maximum (weighted) independent set problem (MIS) in graphs.

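Definition 4.1. Given an undirected graph $G = (V, E)$, a subset of nodes $S \subseteq V$ is an independent set (stable set) if there is no edge in $E$ between any two nodes of $S$. A subset of nodes $S$ is a clique if every pair of nodes in $S$ is joined by an edge of $G$.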

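The MIS problem is the following: given a graph $G = (V, E)$, find an independent set in $G$ of maximum cardinality. In the weighted version each node $v \in V$ has a non-negative weight $w(v)$, and the goal is to find an independent set of maximum total weight. The problem is NP-Hard. As the following theorem shows, it is also extremely hard to approximate.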

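Theorem 4.2 (Håstad [1]). Unless $P = NP$, there is no $\frac{1}{n^{1-\epsilon}}$-approximation for MIS for any fixed $\epsilon > 0$, where $n$ is the number of nodes in the given graph.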

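Remark 4.1. The maximum clique problem is to find a maximum cardinality clique in a given graph. It is approximation-equivalent to MIS, because $S$ is an independent set in $G$ if and only if $S$ is a clique in the complement graph $\bar{G}$.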

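The theorem essentially says the following. There is a class of graphs in which the maximum independent set has size either less than $n^{\delta}$ or greater than $n^{1-\delta}$, and it is NP-Complete to decide which of the two cases holds for a given graph.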

The lower bound result suggests that one should focus on special cases, and several interesting positive results are known. First, we consider a simple greedy algorithm for the unweighted problem.

Greedy($G$):

- $S \leftarrow \emptyset$
- While $G$ is not empty do
  - A. Let $v$ be a node of minimum degree in $G$
  - B. $S \leftarrow S \cup \{v\}$
  - C. Remove $v$ and its neighbors from $G$
- Output $S$
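A minimal Python sketch of Greedy follows. It assumes the graph is given as a dictionary that maps each node to the set of its neighbors, a representation chosen here only for illustration. Ties between nodes of equal degree are broken by dictionary order, which the pseudocode leaves unspecified.

```python
from typing import Dict, Hashable, Set

# Assumed representation: an undirected graph as symmetric adjacency sets.
Graph = Dict[Hashable, Set[Hashable]]


def greedy_min_degree_mis(graph: Graph) -> Set[Hashable]:
    """Greedy for unweighted MIS: repeatedly pick a minimum-degree node."""
    # Copy the adjacency sets so the caller's graph is left untouched.
    remaining = {v: set(nbrs) for v, nbrs in graph.items()}
    S: Set[Hashable] = set()
    while remaining:
        # Step A: a node of minimum degree in the current graph.
        v = min(remaining, key=lambda u: len(remaining[u]))
        # Step B: add it to the independent set.
        S.add(v)
        # Step C: delete v and its neighbors, then update the other adjacency sets.
        removed = remaining[v] | {v}
        for u in removed:
            del remaining[u]
        for u in remaining:
            remaining[u] -= removed
    return S


if __name__ == "__main__":
    star = {0: {1, 2, 3, 4}, 1: {0}, 2: {0}, 3: {0}, 4: {0}}
    print(sorted(greedy_min_degree_mis(star)))  # [1, 2, 3, 4]
```

On the star, every leaf has degree $1$ and the center has degree $4$. Greedy therefore starts with a leaf, which removes the center, and then keeps all remaining leaves.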

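Theorem 4.3. Greedy outputs an independent set $S$ such that $|S| \ge \frac{n}{\Delta + 1}$, where $\Delta$ is the maximum degree of a node in $G$. Moreover, $|S| \ge \frac{\alpha(G)}{\Delta}$, where $\alpha(G)$ is the cardinality of a largest independent set in $G$. Thus Greedy is a $\frac{1}{\Delta}$-approximation.

Proof. We upper bound the number of nodes in $V \setminus S$ as follows. A node $u$ is in $V \setminus S$ because it was removed as a neighbor of some node $v \in S$ when Greedy added $v$ to $S$; we charge $u$ to $v$. A node $v \in S$ is charged at most $\Delta$ times, since it has at most $\Delta$ neighbors. Hence $|V \setminus S| \le \Delta |S|$. Since $|S| + |V \setminus S| = n$, we get $(\Delta + 1)|S| \ge n$, that is, $|S| \ge \frac{n}{\Delta + 1}$.

We now argue that $|S| \ge \frac{\alpha(G)}{\Delta}$. Let $S^*$ be a largest independent set in $G$. As above, each node $v \in S^* \setminus S$ was removed as a neighbor of some node $u \in S$. Since $S^*$ is independent, $u \notin S^*$, so we can charge $v$ to $u \in S \setminus S^*$. Each such $u$ is charged at most $\Delta$ times, so $|S^* \setminus S| \le \Delta |S \setminus S^*|$. Therefore $|S^*| = |S^* \cap S| + |S^* \setminus S| \le |S \cap S^*| + \Delta |S \setminus S^*| \le \Delta |S|$, using $\Delta \ge 1$. $\square$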

Exercise 4.1. Show that Greedy outputs an independent set of size at least $\frac{n}{2(d+1)}$, where $d$ is the average degree of $G$.

Remark 4.2. Turán's well-known theorem shows, via a clever argument, that every graph has an independent set of size at least $\frac{n}{d+1}$, where $d = 2m/n$ is the average degree of $G$.

Remark 4.3. For the case of unweighted graphs one can obtain an approximation ratio of

Exercise 4.2. Consider the weighted

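LP Relaxation: One can formulate a simple linear-programming relaxation for the (weighted) MIS problem. It has a variable $x(v)$ for each node $v \in V$ indicating whether $v$ is chosen in the independent set. For each edge $(u, v)$ a constraint states that at most one of $u$ and $v$ is chosen:

$$\max \sum_{v \in V} w(v)\, x(v) \quad \text{s.t.} \quad x(u) + x(v) \le 1 \;\; \forall (u, v) \in E, \qquad x(v) \in [0, 1] \;\; \forall v \in V.$$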

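If the constraint $x(v) \in [0, 1]$ is replaced by $x(v) \in \{0, 1\}$, the above is a valid integer programming formulation of MIS. Its LP relaxation, however, is not particularly useful, for the following simple reason.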

Claim 4.1.1. For any graph the optimum value of the above LP relaxation is at least $w(V)/2$. In particular, it is at least $n/2$ in the unweighted case.

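Simply set each $x(v)$ to $1/2$, which satisfies every edge constraint. For the complete graph $K_n$ we have $\alpha(K_n) = 1$, so the ratio between the LP optimum and the integral optimum can be as large as $n/2$.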

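One can obtain a strengthened formulation by observing that if $S$ is a clique in $G$, then any independent set contains at most one node of $S$:

$$\max \sum_{v \in V} w(v)\, x(v) \quad \text{s.t.} \quad \sum_{v \in S} x(v) \le 1 \;\; \forall \text{ cliques } S \text{ in } G, \qquad x(v) \in [0, 1] \;\; \forall v \in V.$$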

The above linear program has an exponential number of constraints, and it cannot be solved in polynomial time in general, but for some special cases of interest the above linear program can indeed be solved (or approximately solved) in polynomial time and leads to either exact algorithms or good approximation bounds.
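The small Python check below makes Claim 4.1.1 and the effect of clique constraints concrete on the complete graph $K_n$, an example chosen here for illustration, where $\alpha(K_n) = 1$. The all-$\frac{1}{2}$ vector satisfies every edge constraint and has value $n/2$. It violates the single clique constraint for $S = V$, however. `fractions.Fraction` keeps the comparisons exact, with no floating-point round-off.

```python
from fractions import Fraction
from itertools import combinations


def complete_graph_edges(n):
    """Edges of K_n on nodes 0..n-1."""
    return list(combinations(range(n), 2))


def satisfies_edge_lp(x, edges):
    """Check 0 <= x(v) <= 1 and x(u) + x(v) <= 1 for every edge (u, v)."""
    return all(0 <= xv <= 1 for xv in x) and all(x[u] + x[v] <= 1 for u, v in edges)


def satisfies_clique_constraint(x, clique):
    """Check the strengthened constraint sum_{v in S} x(v) <= 1 for one clique S."""
    return sum(x[v] for v in clique) <= 1


n = 6
edges = complete_graph_edges(n)
half = [Fraction(1, 2)] * n            # the solution used in the proof of Claim 4.1.1
print(satisfies_edge_lp(half, edges))  # True
print(sum(half))                       # 3, i.e. n/2, while alpha(K_6) = 1
print(satisfies_clique_constraint(half, range(n)))  # False
```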

Approximability of Vertex Cover and MIS:

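Fact 4.1. In any graph $G = (V, E)$, $S$ is a vertex cover of $G$ if and only if $V \setminus S$ is an independent set in $G$. Thus $\alpha(G) + \beta(G) = |V|$, where $\alpha(G)$ is the size of a maximum independent set and $\beta(G)$ is the size of a minimum vertex cover of $G$.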

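The above shows that if one of Vertex Cover or MIS is NP-Hard, then so is the other. Approximation guarantees do not transfer, however. Vertex Cover admits a $2$-approximation, while MIS admits no constant factor approximation. Indeed, if $\beta(G) \ge |V|/2$, a $2$-approximate vertex cover may consist of all of $V$, and its complement is the empty independent set.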
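Fact 4.1 is easy to confirm by exhaustive search on small graphs. The Python sketch below uses illustrative helper names. For every subset $S$ it checks that $S$ is a vertex cover exactly when $V \setminus S$ is independent, and it returns $\alpha(G)$ and $\beta(G)$. On the 5-cycle these are $2$ and $3$.

```python
from itertools import combinations


def is_independent(S, edges):
    """No edge has both endpoints in S."""
    return all(not (u in S and v in S) for u, v in edges)


def is_vertex_cover(C, edges):
    """Every edge has at least one endpoint in C."""
    return all(u in C or v in C for u, v in edges)


def alpha_and_beta(n, edges):
    """Brute-force alpha(G) and beta(G) for a small graph on nodes 0..n-1."""
    subsets = [set(S) for k in range(n + 1) for S in combinations(range(n), k)]
    V = set(range(n))
    # Fact 4.1: S is a vertex cover iff V \ S is independent, for every subset S.
    assert all(is_vertex_cover(S, edges) == is_independent(V - S, edges) for S in subsets)
    alpha = max(len(S) for S in subsets if is_independent(S, edges))
    beta = min(len(S) for S in subsets if is_vertex_cover(S, edges))
    return alpha, beta


cycle5 = [(i, (i + 1) % 5) for i in range(5)]
print(alpha_and_beta(5, cycle5))  # (2, 3), and 2 + 3 = 5 = |V|
```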

Some special cases of MIS admit good algorithms:

- Interval graphs: these are intersection graphs of intervals on a line. An exact algorithm can be obtained via dynamic programming, and more general versions can be solved via linear programming methods.
- Line graphs: a maximum (weight) matching in a graph $G$ can be viewed as a maximum (weight) independent set in the line graph of $G$, and it can be found exactly in polynomial time. This has been extended to what are known as claw-free graphs.
- Planar graphs and their generalizations to bounded-genus graphs and graphs that exclude a fixed minor: for such graphs one can obtain a PTAS, due to ideas originally from Brenda Baker.
- Geometric intersection graphs: for example, given $n$ disks in the plane, find a maximum number of disks that do not overlap. One can also consider other (convex) shapes such as axis-parallel rectangles, line segments, and pseudo-disks, and a number of results are known. For example, a PTAS is known for disks in the plane. For axis-parallel rectangles in the plane there is an $O(\log n)$-approximation when the rectangles are weighted, and an $O(\log \log n)$-approximation for the unweighted case. For the unweighted case, Mitchell very recently obtained a constant factor approximation!
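The dynamic program for interval graphs mentioned in the first item can be sketched in Python as follows. This is the standard weighted interval scheduling recurrence, given as an illustration rather than as the specific algorithm the notes have in mind. Intervals are assumed to be closed, so two intervals that share an endpoint overlap.

```python
from bisect import bisect_left
from typing import List, Tuple

Interval = Tuple[float, float, float]  # (start, end, weight); closed intervals assumed


def max_weight_independent_intervals(intervals: List[Interval]) -> float:
    """Weighted MIS in an interval graph via DP over intervals sorted by right endpoint."""
    order = sorted(intervals, key=lambda t: t[1])
    ends = [e for _, e, _ in order]
    # best[i] = maximum weight of pairwise disjoint intervals among the first i in `order`.
    best = [0.0] * (len(order) + 1)
    for i, (s, e, w) in enumerate(order, start=1):
        # p = number of earlier intervals ending strictly before s; exactly these
        # are disjoint from interval i, and they form a prefix of `order`.
        p = bisect_left(ends, s, 0, i - 1)
        # Either skip interval i, or take it with the best solution on that prefix.
        best[i] = max(best[i - 1], best[p] + w)
    return best[-1]


print(max_weight_independent_intervals([(0, 3, 2), (2, 5, 4), (4, 7, 4), (6, 9, 3)]))  # 7.0
```

Sorting by right endpoint guarantees that the earlier intervals compatible with interval $i$ form a prefix. A binary search therefore suffices, giving $O(n \log n)$ time overall.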

4.1.1 Elimination Orders and MIS

We have seen that a simple Greedy algorithm gives a $\frac{1}{\Delta}$-approximation for MIS in graphs with maximum degree $\Delta$.

For a vertex

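Definition 4.4. An undirected graph $G = (V, E)$ is inductively $k$-independent if there is an ordering $v_1, v_2, \ldots, v_n$ of its vertices such that for $1 \le i \le n$, $\alpha(N(v_i) \cap \{v_{i+1}, \ldots, v_n\}) \le k$. Here $N(v)$ is the set of neighbors of $v$, and $\alpha(X)$ denotes the size of a maximum independent set in the subgraph induced by $X$.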

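Graphs that are inductively $1$-independent are exactly the chordal graphs: graphs in which every cycle of length at least $4$ has a chord (an edge between two non-consecutive nodes of the cycle). The corresponding ordering is called a perfect elimination ordering.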

Exercise 4.3. Prove that the intersection graph of intervals is chordal.
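The definition can be checked by brute force on small graphs. The Python sketch below uses hypothetical helper names. It computes $\max_i \alpha(N(v_i) \cap \{v_{i+1}, \ldots, v_n\})$ for a given ordering, where only neighbors that appear later in the ordering are counted. For an interval graph ordered by right endpoint the value is $1$, consistent with Exercise 4.3. For a star, the value depends on where the center is placed.

```python
from itertools import combinations


def independence_number(nodes, adj):
    """Brute-force alpha of the subgraph induced on `nodes` (exponential; small inputs only)."""
    nodes = list(nodes)
    for k in range(len(nodes), 0, -1):
        for S in combinations(nodes, k):
            if all(b not in adj[a] for a, b in combinations(S, 2)):
                return k
    return 0


def inductive_independence(order, adj):
    """max over i of alpha(N(v_i) ∩ {v_{i+1}, ..., v_n}) for the given vertex ordering."""
    position = {v: i for i, v in enumerate(order)}
    return max(
        (independence_number([u for u in adj[v] if position[u] > position[v]], adj)
         for v in order),
        default=0,
    )


def interval_graph(intervals):
    """Intersection graph of closed intervals given as (start, end) pairs."""
    adj = {i: set() for i in range(len(intervals))}
    for i, j in combinations(range(len(intervals)), 2):
        (s1, e1), (s2, e2) = intervals[i], intervals[j]
        if max(s1, s2) <= min(e1, e2):  # the two intervals share a point
            adj[i].add(j)
            adj[j].add(i)
    return adj


intervals = [(0, 4), (1, 2), (3, 6), (5, 8), (2, 3)]
adj = interval_graph(intervals)
by_right_end = sorted(adj, key=lambda i: intervals[i][1])
print(inductive_independence(by_right_end, adj))  # 1

star = {0: {1, 2, 3}, 1: {0}, 2: {0}, 3: {0}}
print(inductive_independence([0, 1, 2, 3], star))  # 3 (center first)
print(inductive_independence([1, 2, 3, 0], star))  # 1 (center last)
```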

Exercise 4.4. Prove that if

The preceding shows that planar graphs are inductively

Exercise 4.5. Prove that the Greedy algorithm that considers the vertices in the inductive $k$-independent order gives a $\frac{1}{k}$-approximation for (unweighted) MIS.
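The order-based Greedy can be sketched in a few lines of Python. Function names are illustrative, and `brute_force_alpha` exists only to compare against the optimum on toy graphs. The star shows why the order matters. With the center last, the order is inductively $1$-independent and Greedy is optimal. With the center first, the order is only inductively $3$-independent and Greedy keeps $1$ of a possible $3$ nodes, matching the $\frac{1}{k}$ bound with $k = 3$.

```python
from itertools import combinations


def greedy_in_order(order, adj):
    """Scan vertices in the given order and keep v if no neighbor of v was kept earlier."""
    chosen = set()
    for v in order:
        if adj[v].isdisjoint(chosen):
            chosen.add(v)
    return chosen


def brute_force_alpha(adj):
    """Exact alpha(G) by exhaustive search (small graphs only)."""
    nodes = list(adj)
    for k in range(len(nodes), 0, -1):
        for S in combinations(nodes, k):
            if all(b not in adj[a] for a, b in combinations(S, 2)):
                return k
    return 0


star = {0: {1, 2, 3}, 1: {0}, 2: {0}, 3: {0}}
# Center last: inductively 1-independent order, Greedy is optimal.
print(sorted(greedy_in_order([1, 2, 3, 0], star)), brute_force_alpha(star))  # [1, 2, 3] 3
# Center first: only inductively 3-independent, Greedy keeps 1 of 3.
print(sorted(greedy_in_order([0, 1, 2, 3], star)))  # [0]
```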

Interestingly, one can obtain a $\frac{1}{k}$-approximation for weighted MIS in inductively $k$-independent graphs as well.

4.2 The efficacy of the Greedy algorithm for a class of Independence Families

The Greedy algorithm can be defined easily for an arbitrary independence system. It iteratively adds the best element to the current independent set while maintaining feasibility. Note that implementing the algorithm requires an oracle that finds the best element to add to a current independent set $S$.

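Greedy($(N, \mathcal{I})$, $w$):

- $S \leftarrow \emptyset$
- While (TRUE)
  - A. Let $A \leftarrow \{e \in N \setminus S \mid S \cup \{e\} \in \mathcal{I}\}$
  - B. If $A = \emptyset$ break
  - C. $e \leftarrow \arg\max_{e \in A} w(e)$
  - D. $S \leftarrow S \cup \{e\}$
- Output $S$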
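Below is a Python sketch of the generic Greedy. It assumes the independence system is given by a membership oracle `is_independent`, a hypothetical interface that stands in for the oracle mentioned above. The example uses matchings on the path $a$-$b$-$c$-$d$. Greedy takes the heaviest edge $bc$ and collects weight $1.5$, while the optimum $\{ab, cd\}$ has weight $2$. So Greedy is not optimal here, although it is within a factor of $2$.

```python
from typing import Callable, Hashable, Iterable, Set


def greedy(ground: Iterable[Hashable],
           weight: Callable[[Hashable], float],
           is_independent: Callable[[Set[Hashable]], bool]) -> Set[Hashable]:
    """Greedy for an independence system given by a membership oracle."""
    ground = list(ground)
    S: Set[Hashable] = set()
    while True:
        # Step A: elements whose addition keeps S independent.
        A = [e for e in ground if e not in S and is_independent(S | {e})]
        # Step B: stop when no element can be added.
        if not A:
            break
        # Step C: pick a best element (ties broken by order in `ground`).
        e = max(A, key=weight)
        # Step D: add it.
        S.add(e)
    return S


def is_matching(edges: Set[Hashable]) -> bool:
    """Oracle for Example 4.2: no two edges share an endpoint."""
    seen = set()
    for u, v in edges:
        if u in seen or v in seen:
            return False
        seen.update((u, v))
    return True


w = {("a", "b"): 1.0, ("b", "c"): 1.5, ("c", "d"): 1.0}
M = greedy(w, w.__getitem__, is_matching)
print(M, sum(w[e] for e in M))  # {('b', 'c')} 1.5
```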

Exercise 4.6. Prove that the Greedy algorithm gives a $\frac{1}{2}$-approximation for the maximum weight matching problem.

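Remark 4.4. It is well-known that the Greedy algorithm gives an optimum solution when $(N, \mathcal{I})$ is a matroid. In fact, this property characterizes matroids among independence systems.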
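For a concrete matroid, take the graphic matroid of a graph. Its ground set is the edge set, and a set of edges is independent if it contains no cycle. Greedy then becomes Kruskal's algorithm run on decreasing weights, and it returns a maximum weight forest. The Python sketch below uses illustrative function names and assumes non-negative weights. It compares Greedy against exhaustive search on a weighted $K_4$.

```python
from itertools import combinations


def is_forest(edges, nodes):
    """Independence oracle of the graphic matroid: True iff `edges` contains no cycle."""
    parent = {v: v for v in nodes}

    def find(v):
        # Union-find with path halving.
        while parent[v] != v:
            parent[v] = parent[parent[v]]
            v = parent[v]
        return v

    for u, v in edges:
        ru, rv = find(u), find(v)
        if ru == rv:  # u and v already connected, so (u, v) would close a cycle
            return False
        parent[ru] = rv
    return True


def greedy_max_forest(nodes, weighted_edges):
    """Greedy on the graphic matroid: heaviest edge first, keep it if no cycle forms."""
    chosen = []
    for u, v, w in sorted(weighted_edges, key=lambda t: t[2], reverse=True):
        if is_forest([(a, b) for a, b, _ in chosen] + [(u, v)], nodes):
            chosen.append((u, v, w))
    return chosen


def brute_force_max_forest(nodes, weighted_edges):
    """Exhaustive search over all edge subsets (small graphs only)."""
    best = 0
    for k in range(len(weighted_edges) + 1):
        for subset in combinations(weighted_edges, k):
            if is_forest([(u, v) for u, v, _ in subset], nodes):
                best = max(best, sum(w for _, _, w in subset))
    return best


nodes = range(4)
edges = [(0, 1, 4), (0, 2, 3), (1, 2, 5), (1, 3, 1), (2, 3, 2), (0, 3, 6)]
forest = greedy_max_forest(nodes, edges)
print(sum(w for _, _, w in forest), brute_force_max_forest(nodes, edges))  # 15 15
```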

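It is easy to see that Greedy does poorly for MIS in general graphs. In a star with $n - 1$ leaves of weight $1$ and a center of weight $2$, Greedy picks only the center, while the leaves have total weight $n - 1$. A natural question is which properties of $\mathcal{I}$ allow Greedy a reasonable performance guarantee.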

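Definition 4.5. An independence system $(N, \mathcal{I})$ is a $k$-system if for every $A \subseteq N$, any two maximal independent subsets $B_1, B_2$ of $A$ (bases of $A$) satisfy $|B_1| \le k |B_2|$.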
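Definition 4.5 can be checked by exhaustive search on tiny examples. The Python sketch below uses illustrative names, and its search is exponential. It returns the smallest $k$ for which an independence system, described by a membership oracle, is a $k$-system. Matchings on a path with three edges give $k = 2$. Independent sets in a star with three leaves give $k = 3$, the maximum degree.

```python
from itertools import combinations


def bases(A, is_independent):
    """All maximal independent subsets of A (brute force)."""
    indep = [frozenset(S) for k in range(len(A) + 1)
             for S in combinations(A, k) if is_independent(frozenset(S))]
    return [S for S in indep if not any(S < T for T in indep)]


def k_system_ratio(ground, is_independent):
    """Max over all A ⊆ ground of |largest base of A| / |smallest base of A|."""
    ground = list(ground)
    k = 1.0
    for r in range(1, len(ground) + 1):
        for A in combinations(ground, r):
            sizes = [len(B) for B in bases(A, is_independent)]
            if min(sizes) > 0:  # all bases are empty iff no single element of A is independent
                k = max(k, max(sizes) / min(sizes))
    return k


def is_matching(edges):
    endpoints = [x for e in edges for x in e]
    return len(endpoints) == len(set(endpoints))


path_edges = [("a", "b"), ("b", "c"), ("c", "d")]
print(k_system_ratio(path_edges, is_matching))  # 2.0: {bc} vs {ab, cd}

star = {0: {1, 2, 3}, 1: {0}, 2: {0}, 3: {0}}


def is_independent_in_star(S):
    return all(v not in star[u] for u in S for v in S)


print(k_system_ratio(star, is_independent_in_star))  # 3.0: {0} vs {1, 2, 3}
```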

The following theorem is not too difficult but not so obvious either.

Theorem 4.6. Greedy gives a $\frac{1}{k}$-approximation for the maximum weight independent set problem in a $k$-system.

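The above theorem generalizes and unifies several examples we have seen so far, including MIS in graphs of maximum degree $\Delta$ (a $\Delta$-system), matchings (a $2$-system), and matroids (a $1$-system).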

Lemma 4.1. Suppose

We leave the proof of the above as an exercise.

4.3 Randomized Rounding with Alteration for Packing Problems

The purpose of this section is to highlight a technique for rounding LP relaxations of packing problems.

Note that it is important to retain the constraint that

Suppose we solve the

Here we illustrate this via the interval problem. Without loss of generality we assume that

- Let

be an optimum fractional solution - Round each

to independently with probability . Let be rounded solution. - For

down to do - A.If

and ( is feasible) then - Output feasible solution

The algorithm consists of two phases. The first phase is a simple selection phase via independent randomized rounding. The second phase is deterministic and is a greedy pruning step in the reverse elimination order. To analyze the expected value of

By linearity of expectation,

Claim 4.3.1.

Via the independent randomized rounding in the algorithm.

Claim 4.3.2.

How do we analyze

at the point

We claim that

Using the claim,

This allows us to lower bound the expected weight of the solution output by the algorithm, and yields a randomized

Claim 4.3.3.

Proof. We have

This type of rounding has applications to a variety of settings - see
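The two-phase structure described in this section can be sketched in Python for interval graphs: independent randomized rounding, then greedy pruning in reverse elimination order. This is an illustration under assumptions. The fractional solution `x` is taken as given rather than computed. The damping factor `scale` is a hypothetical parameter that stands in for whatever scaling the analysis uses. Pruning compares interval $i$ only with later intervals that were kept, so a rounded interval is dropped only if some later overlapping interval survived.

```python
import random
from typing import List, Sequence, Set, Tuple


def overlaps(a: Tuple[float, float], b: Tuple[float, float]) -> bool:
    """Closed intervals a and b share a point."""
    return max(a[0], b[0]) <= min(a[1], b[1])


def round_with_alteration(intervals: Sequence[Tuple[float, float]],
                          x: Sequence[float],
                          scale: float,
                          rng: random.Random) -> Tuple[Set[int], Set[int]]:
    """Two-phase rounding sketch for MIS in an interval graph.

    intervals: assumed sorted by right endpoint (an inductively 1-independent order).
    x: a fractional solution supplied by the caller (for example from the clique LP).
    scale: hypothetical damping factor; phase 1 keeps interval i w.p. scale * x[i].
    """
    n = len(intervals)
    # Phase 1: independent randomized rounding.
    rounded = {i for i in range(n) if rng.random() < scale * x[i]}
    # Phase 2: deterministic greedy pruning in reverse order, i = n-1 down to 0.
    # When i is considered, every already chosen j satisfies j > i.
    chosen: List[int] = []
    for i in range(n - 1, -1, -1):
        if i in rounded and not any(overlaps(intervals[i], intervals[j]) for j in chosen):
            chosen.append(i)
    return rounded, set(chosen)


intervals = sorted([(0, 4), (1, 2), (3, 6), (5, 8), (2, 3), (7, 9)], key=lambda t: t[1])
x = [0.5] * len(intervals)  # illustrative values only, not an LP optimum
rounded, chosen = round_with_alteration(intervals, x, scale=0.5, rng=random.Random(0))
print(sorted(rounded), sorted(chosen))
```

Averaging `len(chosen)` over many seeds gives a quick empirical check of an expected-weight bound on toy inputs.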

4.4 Packing Integer Programs (PIPs)

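We can express the Knapsack problem as the following integer program, where the knapsack capacity has been scaled to $1$ without loss of generality:

$$\max \sum_{i=1}^{n} w_i x_i \quad \text{s.t.} \quad \sum_{i=1}^{n} s_i x_i \le 1, \qquad x_i \in \{0, 1\} \;\; 1 \le i \le n.$$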

More generally, if we have multiple linear constraints on the "items", we obtain the following integer program.

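Definition 4.7. A packing integer program (PIP) is an integer program of the form $\max\{wx \mid Ax \le \mathbf{1},\ x \in \{0,1\}^n\}$, where $w$ is a non-negative $1 \times n$ vector and $A$ is an $m \times n$ matrix with entries in $[0, 1]$.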

In some cases it is useful/natural to define the problem as

When

Definition 4.8. A PIP is $k$-column-sparse if each column of $A$ has at most $k$ non-zero entries.
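Small PIPs in the normalized form $\max\{wx \mid Ax \le \mathbf{1},\ x \in \{0,1\}^n\}$ can be solved by enumeration, which is handy for checking rounding algorithms on toy inputs. The Python sketch below uses illustrative names. It also computes the column sparsity, that is, the maximum number of non-zero entries in a column of $A$. Knapsack is the special case with a single row. Exact `Fraction` sizes avoid spurious infeasibility from floating-point round-off.

```python
from fractions import Fraction
from itertools import product


def pip_value(w, A, x):
    """Return wx if Ax <= 1 holds row by row, otherwise None (x is infeasible)."""
    for row in A:
        if sum(a * xi for a, xi in zip(row, x)) > 1:
            return None
    return sum(wi * xi for wi, xi in zip(w, x))


def brute_force_pip(w, A):
    """Optimal value and solution by enumerating all 2^n vectors x in {0,1}^n."""
    best, best_x = 0, tuple(0 for _ in w)
    for x in product((0, 1), repeat=len(w)):
        value = pip_value(w, A, x)
        if value is not None and value > best:
            best, best_x = value, x
    return best, best_x


def column_sparsity(A):
    """Maximum number of non-zero entries in any column of A."""
    return max(sum(1 for row in A if row[j] != 0) for j in range(len(A[0])))


# Knapsack with capacity scaled to 1: a single row of item sizes.
sizes = [Fraction(1, 2), Fraction(1, 3), Fraction(1, 4), Fraction(2, 3)]
print(brute_force_pip([5, 4, 3, 6], [sizes]))  # (10, (0, 1, 0, 1))

# Two constraints with at most two non-zeros per column: a 2-column-sparse PIP.
A = [[1, 1, 0], [0, 1, 1]]
print(brute_force_pip([1, 1, 1], A), column_sparsity(A))  # (2, (1, 0, 1)) 2
```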

4.4.1 Randomized Rounding with Alteration for PIPs

We saw that randomized rounding gave an

Rounding for Knapsack: Consider the Knapsack problem. It is convenient to think of this in the context of

- Let

be an optimum fractional solution - Round each

to independently with probability . Let be rounded solution. - If

for exactly one big item - A.For each

set - Else If

or two or more big items are chosen in - A.For each

set - Output feasible solution

In words, the algorithm alters the rounded solution

The following claim is easy to verify.

Claim 4.4.1. The integer solution

Now let us analyze the probability of an item

Claim 4.4.2.

Proof. Let

By Markov's inequality,

Claim 4.4.3.

Proof. Since the size of each big item in

Lemma 4.2. Let

Proof. We consider the binary random variable

To lower bound

First consider a big item

Thus,

One can improve the above analysis to show that

Theorem 4.9. The randomized algorithm outputs a feasible solution of expected weight at least

Proof. The expected weight of the output is

where we used the previous lemma to lower bound

Rounding for

- Let

be an optimum fractional solution - Round each

to 1 independently with probability . Let be rounded solution. - For

to do - A.If

for exactly one 1. For each and set - B.Else If

or two or more items from are chosen in 1. For each set - Output feasible solution

The algorithm, after picking the random solution

Claim 4.4.4. The integer solution

Now let us analyze the probability of an item

Claim 4.4.5.

Claim 4.4.6.

Lemma 4.3. Let

Proof. We consider the binary random variable

We upper bound the probability

We used the fact that

The theorem below follows by using the above lemma and linearity of expectation to compare the expected weight of the output of the randomized algorithm with that of the fractional solution.

Theorem 4.10. The randomized algorithm outputs a feasible solution of expected weight at least

Larger width helps: We saw during the discussion on the Knapsack problem that if all items are small with respect to the capacity constraint then one can obtain better approximations. For

- [1] Johan Håstad. “Clique is hard to approximate within $n^{1-\epsilon}$”. In: Proceedings of the 37th Conference on Foundations of Computer Science. IEEE, 1996, pp. 627–636.

- [2] Moran Feldman, Joseph Seffi Naor, Roy Schwartz, and Justin Ward. “Improved approximations for k-exchange systems”. In: European Symposium on Algorithms. Springer, 2011, pp. 784–798.

- [3] Julián Mestre. “Greedy in approximation algorithms”. In: European Symposium on Algorithms. Springer, 2006, pp. 528–539.

- [4] Nikhil Bansal, Nitish Korula, Viswanath Nagarajan, and Aravind Srinivasan. “Solving packing integer programs via randomized rounding with alterations”. In: Theory of Computing 8.1 (2012), pp. 533–565.

Recommended for you

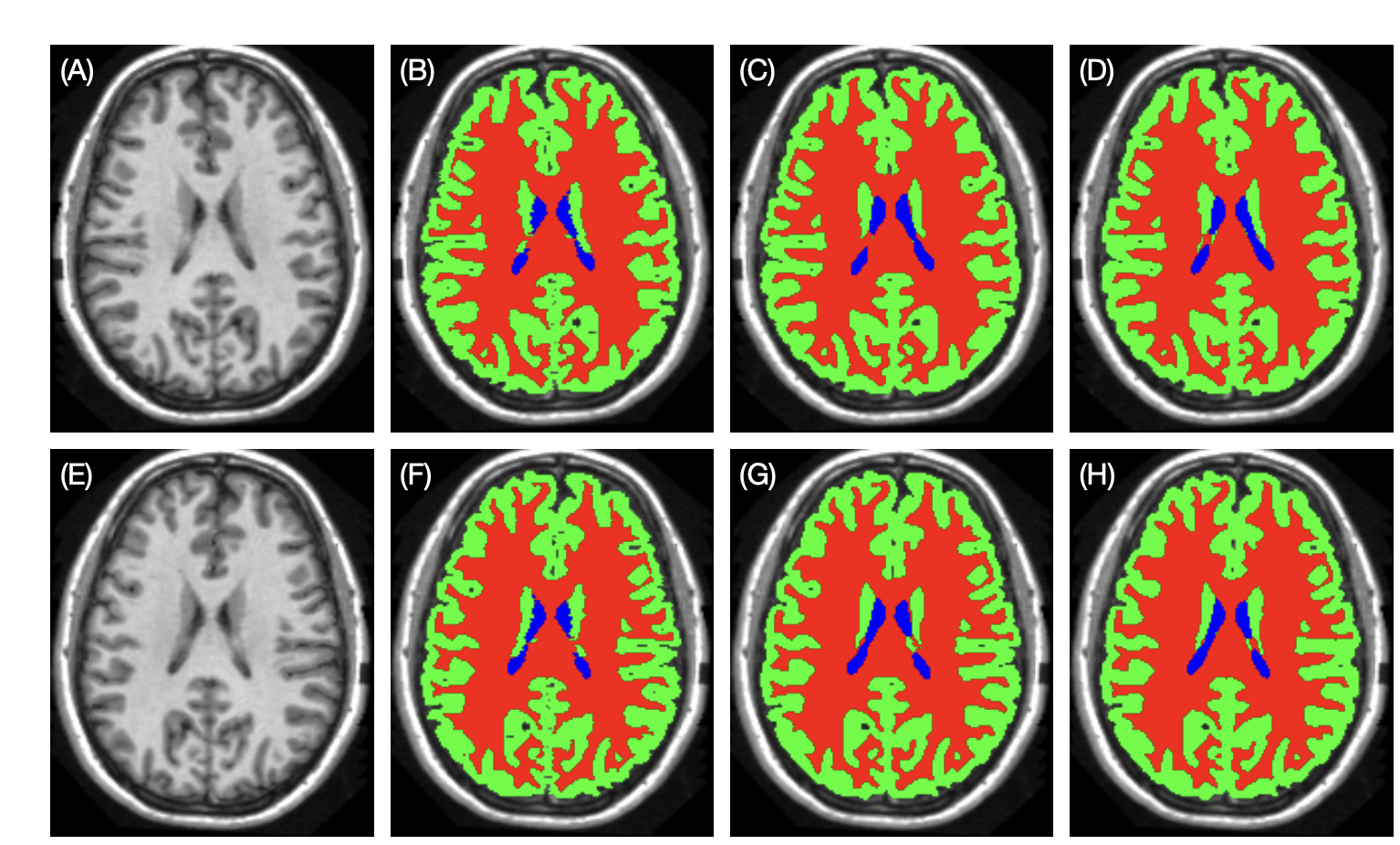

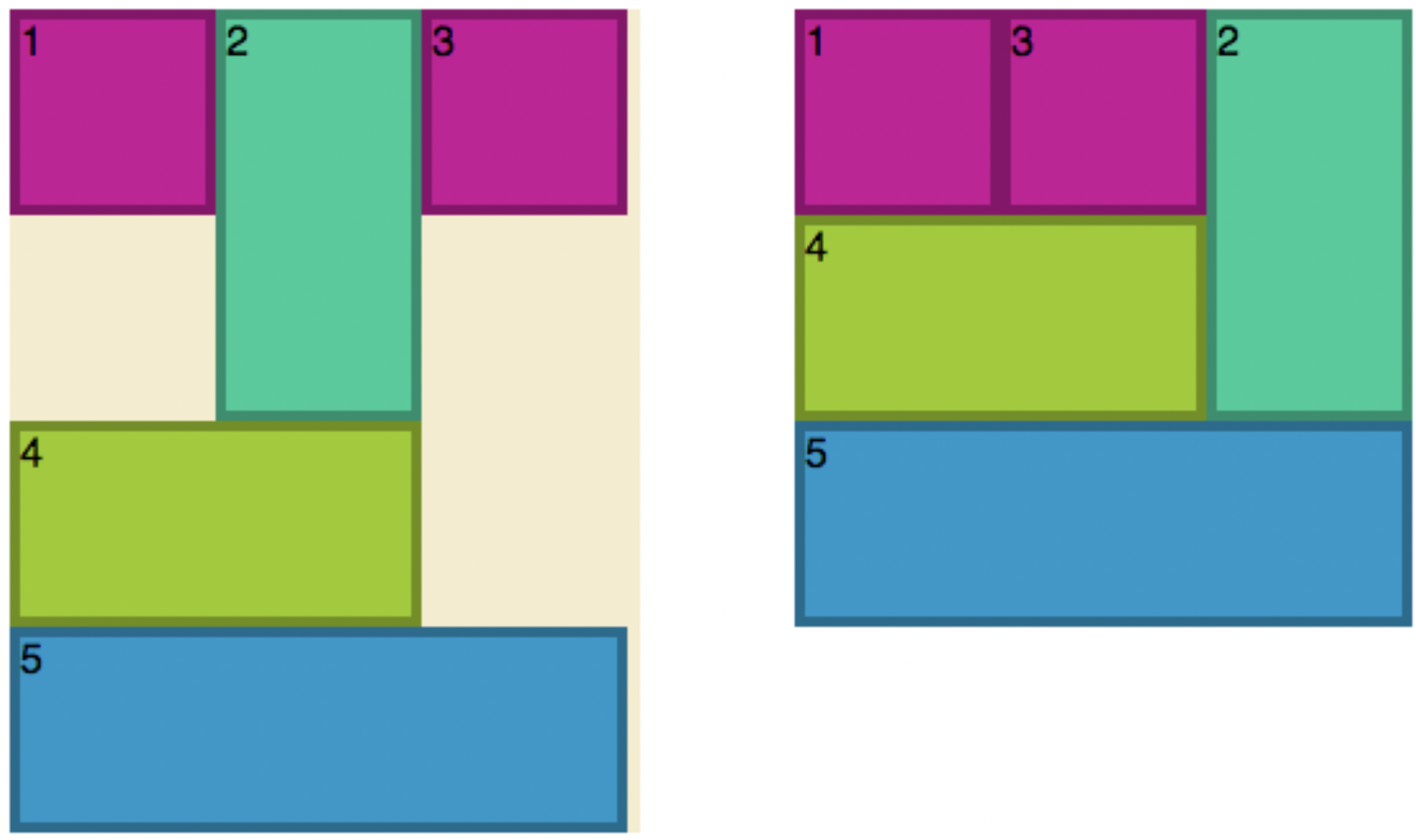

Group Equivariant Convolutional Networks in Medical Image Analysis

Group Equivariant Convolutional Networks in Medical Image Analysis

This is a brief review of G-CNNs' applications in medical image analysis, including fundamental knowledge of group equivariant convolutional networks, and applications in medical images' classification and segmentation.