High Dimension Data Analysis - A tutorial and review for Dimensionality Reduction Techniques

In the current day and age, high dimensional datasets are a common occurrence in most areas of study, with the high volume and variety of data that streams in. Research in the field of deep learning and machine learning have led to development of high efficiency models and algorithms that can deal with high volume of high dimensional datasets. However, there are techniques to engineer some significant variables and reduce the dimensionality by either eliminating other redundant variables or by creating new variables by combining a few existing ones. High dimensionality poses a few problems and hence need to be dealt with. Some of these issues are:

In this article, we investigate and study a few methods for selecting features from a high dimensional dataset, and thus reduce the dimensionality of the datasets.

1. Feature Selection from base features

Some techniques as described below are used to select features from the available base features in the dataset. These techniques do not alter the structure or definition of these variables, but simply select a subset of them.

1.1. Low Variance and High Correlation Filters

Statistically, variance is the measure of spread for a distribution of a random variable that determines the degree to which the values of a random variable differ from the expected value. In other words, it tells us how far the points are from the mean. The formula to variance is given by:

If the variance is closer to zero, that means the variable is fairly constant and does not spread too far away from the mean. A variable with variance 0 means all the values of the variable are constant i.e. all values are the same.

For a variable in the context of a dataset being prepared for machine learning, a variable with variance close to zero contains little to no information about the traget variable. This means it contains little impact on the target variable, and thus very little impact on the model's predictive ability.

Since variance is dependent on the range of the variable, it is important to scale the variables, especially numerical variables before computing the variance. After the variances have been computed for all the scaled variables, you can choose a threshold for the variance. All variables with variance below the theshold can be dropped.

For categorical variables, variables with few unique values but with more than 95% of the values belonging to a specific category can be dropped. For instance, if 95% of the records in a dataset belong to one particular country, say US, it is best to drop this variable as the information of the country would not improve or affect the performance of the model trained on this dataset.

Boolean features are Bernoulli random variables, and the variance of such variables is given by:

A similar feature-selection criteria can be made on the basis of the correlation of the existing features. Multiple features which are highly correlated among themeselves can lead to multicollinearity in a model. In models, especially regression models, if a feature is a linear function of 1 or more features in the dataset, it makes it difficult to estimate the relationship between each independent variable and the dependent variable independently. Thus, it is important to eliminate multicollinearity by retaining only one of the highly correlated features.

We use Pearson's correlation to find the correlation between numerical variables. To find correlations between categorical features, we can use the chi-squared test. The chi-squared statistical test considers 2 hypotheses:

H0 (Null Hypothesis) = The 2 variables to be compared are independent. H1 (Alternate Hypothesis) = The 2 variables are dependent.

Now, if the p-value obtained after conducting the test is less than 0.05, we reject the Null hypothesis and accept the Alternate hypothesis. If the p-value is greater that 0.05 we accept the Null hypothesis and reject the Alternate hypothesis i.e. it implies that the 2 variables are independent. A p-value of 0 would indicate that we can accept the alternate hypothesis with high confidence and that the two variables are highly correlated.

For determining the correlation between a categorical and a numerical variable, we can use correlation ratio (

1.2. Recursive Feature Elimination (RFE)

| Algorithm 1: Recursive Feature Elimination | |

| Tune/Train the model on the training set using predictors |

|

| Determine the appropriate number of features in the final model |

|

| While |

|

| Calculate the importance of the predictors | |

| Drop lowest |

|

| Tune/Train the model on the training set using the |

|

| [Optional] Calculate model performance | |

| end | |

| Train the model using the optimal |

|

Recursive Feature Elimination is wrapper-type feature selection technique. This means that a different machine learning algorithm is given and used in the core of the method, wrapped by RFE to help select features. Unlike filter-based feature selection methods that score each feature and select those features with the largest (or smallest) score, this method stops when it reaches a stopping criterion.

In RFE, we aim to select features by recursively considering smaller sets of features in each iteration. First, the estimator is trained on the initial set of features, often the entire set of predictors and the importance score of each feature is computed either through any specific attribute like feature importance, p-values, variable coefficients or a callable. Then, the least important features are pruned from current set of features, the model is re-built, and importance scores are computed again. That procedure is recursively repeated on the pruned set until the desired number of features to select is eventually reached.

One can specify the number of predictor subsets to evaluate as well as each subset’s size. Therefore, the variable subset size is a tuning parameter for RFE. The subset size that optimizes the performance criteria is used to select the predictors based on the importance rankings. The optimal subset is then used to train the final model.

One important thing to note, is that the RFE method cannot be used with all models. RFE requires that the initial model uses the full predictor set. Hence, RFE cannot be used with some models like multiple linear regression, logistic regression, and linear discriminant analysis, when the number of predictors exceeds the number of samples. To use RFE in these cases, the number of predictors must first be reduced.

1.3. Sequential Feature Selection (SFS)

Sequential Feature Selection or SFS is another wrapper-method for feature selection. SFS can be either forward or backward. You can specify the desired number of features. The feature selection algorithm removes or adds one feature at a time based on the model performance until a feature subset of the desired size

Forward-SFS is a greedy algorithm that iteratively finds the best new feature to add to the set of selected features. We initially start with zero features and find the one feature that maximizes a cross-validated score when an estimator is trained on this single feature. Once that first feature is selected, we repeat the procedure by adding a new feature to the set of selected features. The procedure stops when the desired number of selected features

Backward-SFS follows the same idea but works in the opposite direction: instead of starting with no feature and greedily adding features, we start with all the features and greedily remove features from the set. The direction parameter controls whether forward or backward SFS is used.

| Algorithm 2: Backward SFS | |

| Define the desired number of features | |

| Tune/Train the model on the training set using all predictors | |

| Compute Model Performance | |

| Calculate the importance of the predictors | |

| For each each subset of variables |

|

| Greedily drop the feature responsible for the worst model performance at the given point; retain |

|

| Tune/Train the model on the training set using the remaining |

|

| Calculate model performance | |

| end | |

| Calculate the performance profile over the final |

|

| Train the model using the optimal |

|

While the forward SFS algorithm is easier to understand, there are minor diferences between backward SFS and RFE. SFS does not require the underlying model to expose variable coefficients or importance scores. The iterative step makes a greedy decision on the basis of the model performance. This algorithm includes a model selection on the basis of various parameters like Adj RSquared, Mallow's Cp, BIC or AIC, Accuracy or even Root Mean Squared Error (RMSE), depending on the nature of the model.

SFS may however be slower considering that more models need to be evaluated, compared to RFE. For example in backward selection, the iteration going from

1.3.1. Definitions of some model-selection parameters

AIC: The Akaike information criterion (AIC) is an estimator of out-of-sample prediction error and thereby relative quality of statistical models for a given set of data. It can be defined as:

Here,

BIC: Bayesian Information Criteria (BIC) is a model selection parameter defined from Bayesian probability and inference. It can be defined as:

Here,

MDL: The Minimum Description Length, or MDL for short, is a method for scoring and selecting a model. It is an information theoretic measure of model selection. It represents the minimum number of bits, or the minimum of the sum of the number of bits required to represent the data and the model and is defined as:

Here,

We also aim to reduce MDL, and hence the model with the lowest MDL is selected.

1.4. Embedded Methods: L1 regularization for Feature Selection

Let us talk about the two most common regularization techniques:

L1 norm penalty:

Here,

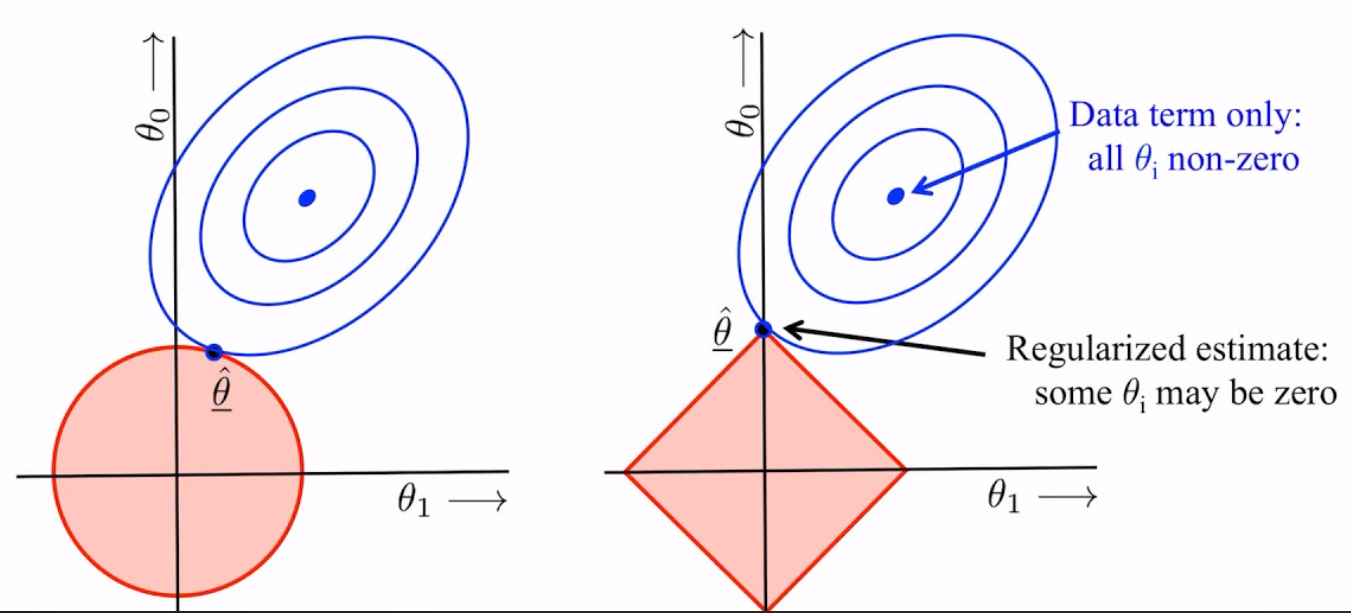

Graphical representation of L2 (left) and L1 (right) regularization

Graphical representation of L2 (left) and L1 (right) regularizationIn the above images, we see that

Thus, including a regularization term like L1-regularization with a machine learning model like linear regression to perform an embedded feature selection. Lasso regression uses L1 regularization on top of linear regression. It is like linear regression, but it uses a technique "shrinkage" where the coefficients of determination are shrunk towards zero.

1.5. Tree-based Feature Selection

Tree-based models can be used to compute impurity-based feature importances, which in turn can be used to discard irrelevant features. Let us consider the Random Forest models which computes importance scores for each feature based on the ‘Gini’ criterion during the training process. The Gini index is defined as follows:

Here, given a dataset

In other words, a lower Gini impurity index leads to a lower likelihood of misclassification. We can use impurity-based feature importances to discard irrelevant features using a meta-transformer for selecting features based on importance weights.

2. Feature Extraction using Transformation/ Combination of Features

There are a few methods that transform existing variables in the base dataset and/or combine them with other features to reduce the dimensionality while retaining maximum information/variance in the dataset.

These methods can be classifed as linear or non-linear methods. The linear methods involve linearly projecting the original data onto a low-dimensional space and extract the transformed features. Some of these methods involve Principal Component Analysis (PCA), Factor Analysis (FA), Linear Discriminant Analysis (LDA) and Truncated Singular Value Decomposition (Truncated SVD). We will also cover some non-linear methods which work better with non-linear data.Some of those methods are: Kernel PCA, t-distributed Stochastic Neighbor Embedding (t-SNE), Multidimensional Scaling (MDS), and Isometric mapping (Isomap)

2.1. Principal Component Analysis (PCA)

In a dataset with large number of variables, it can be difficult to study and interpret the relationships between these variables. There can be too many pairwise correlations between the variables to consider. Moreover, it is difficult to visualize these variables and relationships.

To interpret the data in a more meaningful form, it is necessary to reduce the number of variables to a few, interpretable linear combinations of the data. Each linear combination will correspond to a principal component. This is the essence of PCA.

Let us assume a random vector

If we consider the linear combinations as below:

Each of the above equations represent the

and the covariance between

Now, the coefficients can be represented as a vector:

Now, if we look at the first Principal Component:

subject to the constraint:

Similarly, the second principal component is the linear combination of x-variables that explains the maximum proportion of the remaining variation, with the constraint that the sum of the squared coefficients is equal to one and the additional constraint that the correlation between the first and second component is 0. It implies that the below variance is maximized:

given the first and second principal components are uncorrelated:

All subsequent principal components have this same property – they are linear combinations that account for as much of the remaining variation as possible and they are not correlated with the other principal components. Moreover, all principal components are uncorrelated with one another.

To find coefficients

The corresponding eigenvectors can be represented as

Since principal components are a combination of multiple variables, interpreting it can be tough. To do so, we can look at the correlation between the principal components and the individual variables. The direction and the magnitude of the correlation score with each of the variables tells us how each principal component can be interpreted. Say a particular principal component has a strong correlation of 0.95 with a variable

It is important to note that one should perform feature scaling before running PCA especially, if there is a significant difference in the scale between the features of the dataset. One can define the number of principal components to be extracted from a dataset. This could be defined using the proportion of the explained variance by each of these principal components.

2.2. Factor Analysis (FA)

Factor Analysis (FA) is a dimensionality reduction technique that also helps extract latent variables which are not directly measured in a single variable but rather inferred from other variables in the dataset. These latent variables are called factors. It models observed variables, and their covariance structure, in terms of a smaller number of these latent unobserved factors.

To understand the algorithm, let us start with a vector

This is a random vector, with a population mean. Assume that vector of traits

Here,

Now, we represent each variable as a regression function of these factors as follows:

Here, the variable means

Moreover, the error terms

Each of our response variables

The factor model has a few assumptions though:

- The specific factors or random errors all have mean zero.

- The common factors have variance one.

- The common factors are uncorrelated with each other.

- The specific factors are uncorrelated with each other.

- The specific factors are uncorrelated with the common factors.

We can interpret factor analysis as an inversion of principal components analysis. While PCA represents new components as a function of combination of observed variables, FA models the observed variables as linear functions of the new unobserved variables called factors. However, both PCA and FA reduce the dimension of the dataset.

2.3. Linear Discriminant Analysis

Linear Discriminant Analysis, or LDA, is a linear machine learning algorithm used for multi-class classification. It can also be used for dimensionality reduction.

Let us assume a dataset with

The aim of LDA is to:

Thus, LDA tries to separate (or discriminate) the samples in the training dataset by their class value. In this pursuit of dimensionality reduction, the model tries to find a linear combination of input variables that achieves the maximum separation for samples between classes (class centroids or means) and the minimum separation of samples within each class.

To explain this better, let us compare LDA to PCA. PCA is an unsupervised machine learning method, that is it takes into account only the spectral data and its variance to reduce the number of features. It does not require the labels of the data samples.

However, LDA makes use of the class labels to produce a dimensionality reduction that maximizes the distance between the classes. In other words, while PCA will find the projections that maximise the variance of the data irrespective of their classes, LDA will seek to maximise the distance between those classes while reducing the number of features.

In the above image, we see how LDA projects the existing features onto an exis such that it separates the two classes in the projected space. The general overview of this algorithm looks like the following:

| Algorithm 3: Linear Discriminant Analysis | |

| Compute the d-dimensional mean vector for the different classes from the dataset. | |

| Compute the Scatter matrix (in between class and within the class scatter matrix) | |

| Sort the Eigen Vector by decreasing Eigen Value and choosing |

|

| Used |

|

Although it looks like LDA can perform better than PCA in multi-class classification models, it is not always the case. The choice depends on the performance of the model built on the transformed dataset. One can also combine LDA and PCA as an ensemble to obtain transformed features.

2.4. Truncated Singular Value Decomposition (SVD)

The Singular-Value Decomposition, or SVD, is a matrix decomposition method for reducing a matrix to its constituent parts in order to make certain subsequent matrix calculations simpler. To understand Truncated SVD, let us briefly explain SVD.

SVD is a method for low-dimensional representation of a high-dimensional matrix. In this process, it also makes it easy to eliminate the less important parts of that representation to produce an approximate representation with any desired number of dimensions. Thus, it is a dimensionality reduction technique.

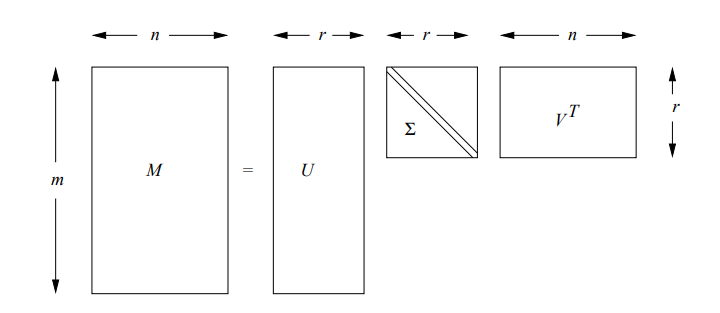

Let

Form of a singular-value decomposition

Form of a singular-value decompositionIt can be mathematically represented as:

Let us define the above matrices:

is an column-orthonormal matrix ; i.e., each of its columns is a unit vector and the dot product of any two columns is 0. is an column-orthonormal matrix. Note that we always use V in its transposed form, so it is the rows of that are orthonormal. is a diagonal matrix; that is, all elements not on the main diagonal are 0. The elements of are called the singular values of .

The key to understanding what SVD offers is in viewing the

In order to determine how many singular values need to be retained, it is a thumb-rule to retain enough singular values to make up 90% of the energy in

In truncated SVD, we take the

Contrary to PCA, this estimator does not center the data before computing the singular value decomposition. This means it can work with sparse matrices efficiently. Thus, we can used Truncated SVD as a dimensionality reduction technique for sparse datasets.

2.5. Kernel PCA

All the above methods are linear methods of dimensionality reduction. They work best for linear data. To be able to reduce the dimensions of non-linear data, we need non-linear methods. The first one discussed here is Kernel PCA. It is a non-linear version of PCA that uses kernels.

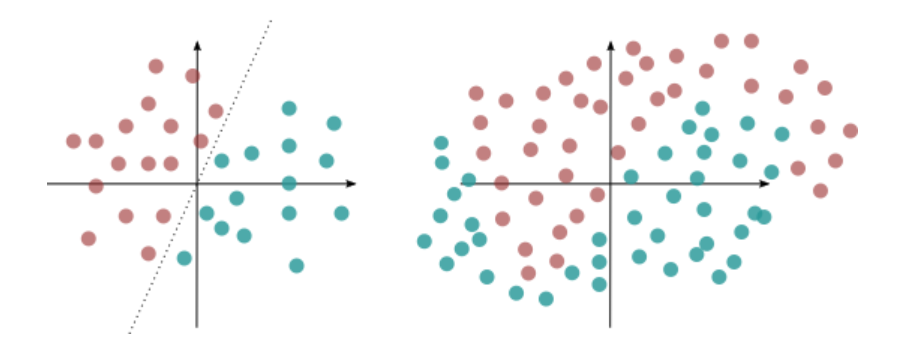

Linear and Non-linear dataset

Linear and Non-linear datasetIn the above figure, the data on the left is linearly separable. The one on the right is non-linear and needs a higher order polynomial to divide the complex boundary.

In the conventional PCA algorithm, the process of matrix decomposition into eigenvectors is a linear transformation. Kernel PCA can be used to reduce the dimensions of data during classification of data whose decision boundaries are described by non-linear function. The idea is to go to a higher dimension space in which the decision boundary becomes linear. Let's say the decision boundary of the above data distribution is defined by a third order polynomial

Thus the algorithm can be simplified as below:

| Algorithm 4: Kernel PCA | |

| Define the original set of |

|

| Define |

|

| Define the kernel function: compute the kernel function |

|

| Calculate the squared Euclidean distance between each pair of points in the dataset. | |

| Pass the distance matrix through the defined kernel to compute the kernel matrix for the given dataset. | |

| Calculate the eigenvalues and eigenvectors of the kernel matrix | |

| Flip eigenvectors' sign to enforce deterministic output | |

| Concatenate the eigenvectors corresponding to the highest |

|

One caveat in using Kernel PCA is that one has to define a value for the

2.6. t-distributed Stochastic Neighbor Embedding (t-SNE)

This is a widely used dimensionality reduction technique for data visualization, image processing and NLP. The algorithm can be described using these broad steps:

| Algorithm 5: t-SNE | |

| Compute the Euclidean distance matrix for all points from each other | |

| Transform the distances into conditional probabilities that represent the similarity between every two points | |

| Using the conditional probabilities, compute joint probability function: |

|

| Build a random dataset of points with the same number of points as in the original dataset, and |

|

| For this new dataset, create their joint probability distribution using the t-distribution (instead of Gaussian distribution) | |

| Ese the Kullback-Leiber (KL) divergence to make the joint probability distribution of the data points in the low dimension (new random dataset) as similar as possible to the one from the original dataset. | |

| The new transformation gives us a new dataset with reduced dimensions | |

We choose t-distribution instead of the Gaussian distribution because of the heavy tails property of the t-distribution. This leads to moderate distances between points in the high-dimensional space to become extreme in the low-dimensional space, and that helps prevent “crowding” of the points in the lower dimension.

We use KL-divergence as a measure of how much two distributions are different from one another. Lower the value of KL-divergence, more similar are the distributions. To find the new dataset with the reduced dimensions, we use the gradient descent optimization. The cost function that the gradient descent tries to minimize is the KL divergence of the joint probability distribution from the high-dimensional space and the low-dimensional space.

Another advantage of using t-distribution is that it helps improve the efficiency of the gradient-descent optimization process.

2.7. Multidimensional Scaling (MDS)

MDS is a non-linear dimensionality reduction technique that tries to preserve the distances between samples while reducing the dimensionality of non-linear data. It is also known as Principal Coordinates Analysis (PCoA), Torgerson Scaling or Torgerson–Gower scaling.

The main objective of MDS is to represent dissimilarities between data points as distances between points in a low dimensional space such that the distances correspond as closely as possible to the dissimilarities. The classical MDS algorithm, also called metric scaling can be described as below:

| Algorithm 6: Classical MDS | |

| Given a matrix |

|

| From matrix |

|

| Determine the largest eigenvalues |

|

| Get the square root of the dot product of the matrix of eigenvectors and the diagonal matrix of eigenvalues of |

|

This algorithm is now synonymous with Principal Coordinates Analysis. This is the metric MDS that deals with numerical distances, in which there is no measurement error (you have exactly one distance measure for each pair of items).

Non-metric MDS deals with non-numerical distances between items, in which there is no measurement error (you have exactly one distance measure for each pair of items). Thus, in non-metric Multidimensional Scaling, we compute a dissimilarity matrix instead of a distance matrix.

2.8. Isometric mapping (Isomap)

This non-linear technique of dimensionality reduction is an extension of MDS or Kernel PCA. This algorithms uses curved or geodesic distance to connect eah instance to its nearest neighbors and reduces dimensionality.

The broad steps of this algorithm can be described as below:

| Algorithm 7: Isomap | |

| Define |

|

| Use KNN-approach to find the |

|

| Construct the neighborhood graph where points are connected to each other if they are each other’s neighbors | |

| Compute the shortest path or the geodesic distance between each pair of data points using either Floyd-Warshall or Dijkstra’s algorithm | |

| Use MDS to compute lower-dimensional embedding | |

Use of MDS ensures that each object into the N-dimensional space (where

Comparison of linear Euclidean distance and Geodesic distance between two points on a non-linear data representation

Comparison of linear Euclidean distance and Geodesic distance between two points on a non-linear data representationThe use of the geodesic distance ensures that we do not misrepresent the distance between two points in a non-linear data distribution. IN the above image, if we look at points A and B, they appear to be much closer to each other if Euclidean distance is used to compute the distance between the two points. However, geodesic distances traces the path between the 2 points on the basis of the data distribution and is hence a more accurate representation of the distance between them. Use of a linear dimensionality reduction technique would hence, not be accurate.

3. AutoEncoder for Dimensionality Reduction

Autoencoders are artificial neural networks used for unsupervised learning. They are responsible for efficient coding or representation of data.

Architecture of a simple autoencoder

Architecture of a simple autoencoderThe above image shows the architecture of a simple autoencoder. It consists of an encoder-decoder network. Here, the encoder takes the input and transforms it into a compressed representation i.e. encoding, and then passes it to the decoder. The decoder, as the name suggests, seeks to reconstruct the original representation from the encoded data, as accurately as possible. The goal is to learn an encoded representation for a dataset. Looking at the image above, it follows a bottle-neck architecture, which indicates that the dimensions of the encoded output from the encoder i smaller than the original input.

This architecture forces the autoencoder to compress the training data’s informational content, and embed it into a low-dimensional space.

Like any other neural network, there is a lot of flexibility in how autoencoders can be constructed such as the number of hidden layers and the number of nodes in each, along with the activation function of eavh layer. With each hidden layer the network will attempt to find new structures in the data.

| Comparison of PCA and Autoencoder for dimensionality reduction | ||

| Parameter | PCA | Autoencoders (AE) |

| Data transformation | PCA follows linear transformation of data | Autoencoders can be either linear or non-linear on the basis of the activation function |

| Computational Efficiency | PCA is relatively faster | Autoencoders use Gradient descent optimization and is slower |

| Data Sample Size | PCA works best with smaller datasets | Autoencoders can be used for large datasets |

| Hyperparameter Tuning/ Input parameters | PCA need a hyperparameter |

Autoencoders have a complex architecture that needs to defined. This needs an activation function, number of dimensions in the encoding, number of nodes in each layer and number of layers in the network etc. |

Let us try to construct a simple Autoencoder for dimensionality reduction:

Simple autoencoder for dimensionality reduction from 30 inout features to 10 features

Simple autoencoder for dimensionality reduction from 30 inout features to 10 featuresThe above autoencoder (built using Keras in Python) seeks to represent an input dataset with 30 features as an encoded space with 10 features. The encoder and decoder and constructed separately (in most cases, the AE is symmetrical i.e. the encoder and decoder are complementary to each other). At the end, they are stitched together to create the AE. We then need to train the autoencoder i.e. fit it on our dataset. We can then extract the encoded space from the encoder step of the AE.

4. Additional Algorithms for Dimensionality Reduction

4.1. LCEM: Linear dimensionality reduction algorithm based on conditional entropy minimization.

LCEM algorithm

LCEM algorithmInformation Theory is a branch of computer science and mathematics that deals with transmission, storage and quantification of information bits. It is also widely used in the field of machine learning to design and optimize algorithms.

In 2010, Hino and Murata proposed a dimensionality reduction based on class conditional entropy minimization. To look at dimensionality reduction from an information theoretic method, let us define the Shannon Entropy of a random variable

The method described in this section, constructs a transformation

The minimization of the class conditional entropy will be done using gradient descent optimization. This means that we calculate the gradient vector of the class conditional entropies of the transformed data

Since the objective is to minimize the sum of the marginal entropies, a simple optimization for each marginal entropy may lead us to the same single transformation vector

This optimization process is repeated until the model converges. The result at the end of this process is a converged transformation matrix

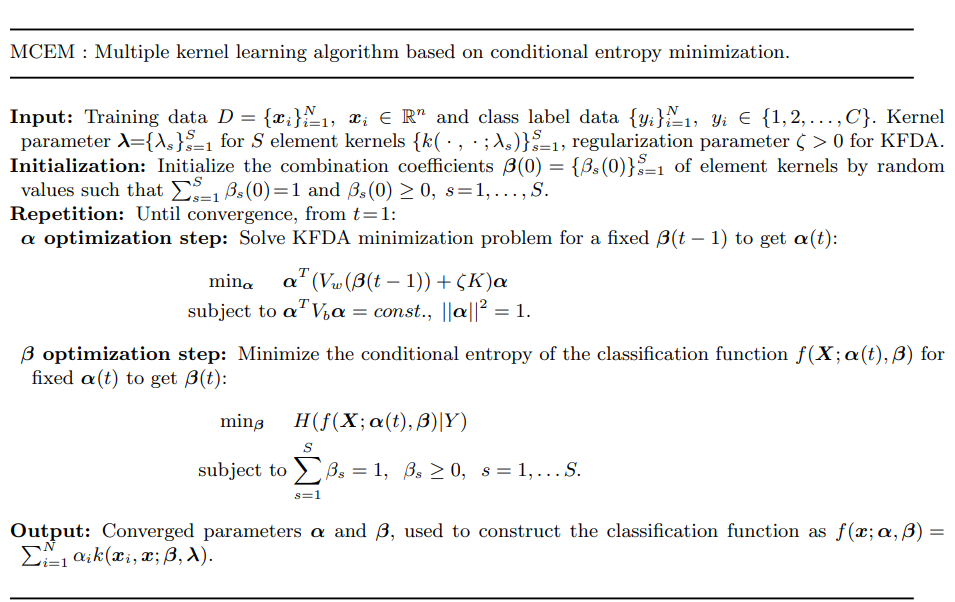

The same paper as above, also proposes another method of dimensionality reduction: MCEM or Multiple kernel learning algorithm based on conditional entropy minimization.

The MCEM algorithm is represented in teh image above. THe above technique extends dimensionality reduction using class conditional entropy to non-linear data. It suggests that we combine multiple kernel functions with a coefficient vector

5. Conclusion

This review only studies a few techniques for dimensionality reduction. There are several other techiques that have been studied in the past. In 2014, Plan et al. studied Dimension Reduction by random hyperplane tessellations. In 2012, Lee et al. published his work and review of graph-based dimension reduction techniques. Neighbourhood components analysis, suggested by Goldberger et al. in 2004 uses Mahalanobis distance measure for dimensionality reduction.

6. References

- Hino, Hideitsu & Murata, Noboru. (2010). A Conditional Entropy Minimization Criterion for Dimensionality Reduction and Multiple Kernel Learning. Neural computation. 22. 2887-923. 10.1162/NECO_a_00027.

- Plan, Yaniv & Vershynin, Roman. (2011). Dimension Reduction by Random Hyperplane Tessellations. Discrete and Computational Geometry. 51. 10.1007/s00454-013-9561-6

- Lee, John A. & Verleysen, Michel (2012). Graph-based dimensionality reduction (https://perso.uclouvain.be/michel.verleysen/papers/bookLezoray12jl.pdf)

- Goldberger, Jacob & Roweis, Sam & Hinton, Geoffrey & Salakhutdinov, Ruslan. (2004). Neighbourhood Components Analysis.. in Advances in Neural Information Processing Systems (NIPS 2004) Vancouver Canada: Dec.. 17.

- Principal Component Analysis (https://online.stat.psu.edu/stat505/lesson/11)

- Factor Analysis (https://online.stat.psu.edu/stat505/lesson/12)