Spiking Neural Network: Towards Brain-inspired Computing

Introduction

Since AlexNet, deep learning (DL) has made great progress in many areas. The success of connectionism encourages us to seek more inspiration from the brain. However, the way artificial neural networks (ANNs) in DL work remains far from how neurons in the brain work. The differences can be summarized as follows:

- In deep learning, artificial neurons communicate with each other using analog signals, whereas neurons in the brain use electrical spikes.

- Backpropagation (BP) gives deep learning its excellent performance. However, so far there is no evidence that the BP mechanism exists in the biological brain.

- In deep learning, large amounts of labeled data and training are essential. A biological brain, however, does not need nearly as much data to learn to classify cats and dogs.

- During inference, deep learning consumes a huge amount of energy, whereas the human brain draws only about as much power as a 20 W light bulb.

To make artificial intelligence more powerful, researchers look to the biological brain for inspiration, which brings us to the spiking neural network (SNN). In an SNN, neurons imitate the behavior of neurons in the brain and fire spikes to transmit information. Some researchers regard SNNs as the third generation of neural networks, following the current MLP-based second generation (ANNs). However, current SNNs still have their limitations. Let's start.

What is SNN? A brief overview

The basic concept

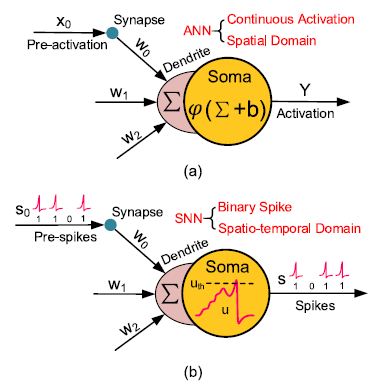

The spiking neural network evolved from the action potential characteristics of biological neurons. Inspired by brain circuits, each neuron in an SNN model updates its membrane potential based on its memorized state and current inputs, and fires a spike when the membrane potential crosses a threshold. Spiking neurons communicate with each other using binary spike events rather than the continuous activations used in ANNs. It is particularly noteworthy that SNNs have a built-in spatiotemporal mechanism, whereas in ANNs only special architectures such as RNN and LSTM introduce the time dimension [1]. The binary spike communication mode and the rich spatiotemporal dynamics of SNNs constitute the most basic differences from current ANNs [2].

Basic neuron model in (a) ANN and (b) SNN.

The spike neuron models

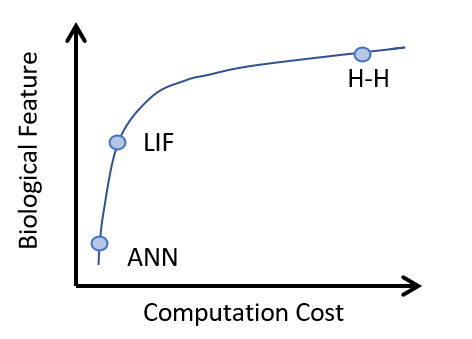

The first spiking neuron model, the Hodgkin-Huxley model (H-H model), was proposed by Alan Hodgkin and Andrew Huxley in 1952 [3]. The model explains the mechanism behind the action potentials of biological neurons. However, H-H neurons are computationally very expensive, which makes them impractical for computational simulation.

Just as we learned wings from birds, but not feathers, researchers simplified the simulation of biological neurons while preserving the initiation and propagation of action potentials. Nowadays, leaky integrate-and-fire (LIF) neurons are widely used for computing in the SNN community. The LIF model strikes a balance between preserving the characteristics of biological neurons and simplifying computation. In addition, there is a series of other neuron models, such as the Izhikevich model, the Hindmarsh–Rose model and the Morris–Lecar model, which trade off biological fidelity against computational cost to different degrees.
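To make the LIF idea concrete, here is a minimal sketch of a single discrete-time LIF neuron. The function name `simulate_lif`, the parameter values (`tau`, `v_threshold`, `v_reset`, `dt`) and the hard reset after firing are illustrative assumptions, not taken from any particular paper or library.

```python
def simulate_lif(input_current, tau=10.0, v_threshold=1.0, v_reset=0.0, dt=1.0):
    """Simulate one LIF neuron; return (spikes, potentials) per time step.

    All parameter values are illustrative assumptions.
    """
    v = v_reset
    spikes, potentials = [], []
    for current in input_current:
        # Leak toward the reset potential and integrate the input current.
        v += (dt / tau) * (-(v - v_reset) + current)
        if v >= v_threshold:
            spikes.append(1)
            v = v_reset  # hard reset after firing (one common choice)
        else:
            spikes.append(0)
        potentials.append(v)
    return spikes, potentials


# A constant input above threshold makes the neuron fire periodically;
# a constant input below threshold never produces a spike.
strong_spikes, _ = simulate_lif([1.5] * 50)
weak_spikes, _ = simulate_lif([0.5] * 50)
print("spikes with strong input:", sum(strong_spikes))
print("spikes with weak input:", sum(weak_spikes))
```

The neuron keeps a single state variable, the membrane potential, which is the "memorized state" that separates a spiking neuron from a stateless ANN unit.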


How does SNN work? The training of SNN

Now you are facing one of the real troubles of SNNs! SNNs aim to preserve more biological characteristics, but this also means that our old friend from deep learning, the BP mechanism, is hard to apply. Current training methods can be divided into three categories: direct training, ANN2SNN conversion and approximate BP-based deep learning.

Direct training

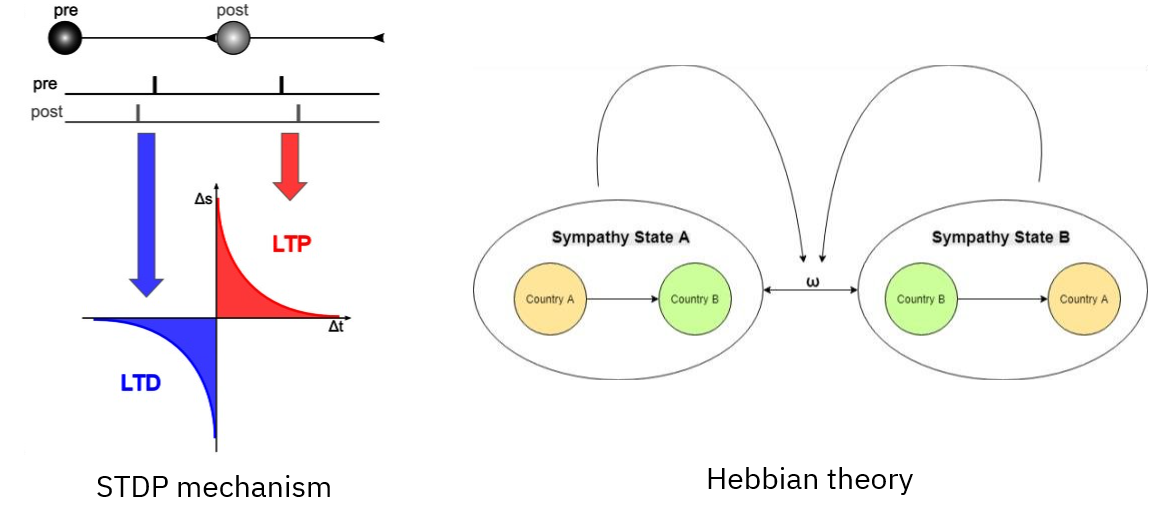

Direct training methods, such as spike-timing-dependent plasticity (STDP) and Hebbian learning, come from the conceptual modeling of biological mechanisms [4]. Under STDP, if a neuron's input spike tends, on average, to appear immediately before its output spike, that input is strengthened. If, on average, the input spike tends to appear immediately after the output spike, that input is slightly weakened. As for Hebbian learning, a widely recognized summary is "Cells that fire together wire together." These learning mechanisms are biologically interpretable and have many praiseworthy advantages, but neural networks trained with them perform poorly.
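The STDP rule described above can be sketched for a single pair of spikes. This is a common pair-based form with exponential time windows; the function name `stdp_delta_w` and all constants (`a_plus`, `a_minus`, `tau_plus`, `tau_minus`) are assumptions chosen for illustration.

```python
import math


def stdp_delta_w(t_pre, t_post, a_plus=0.01, a_minus=0.012,
                 tau_plus=20.0, tau_minus=20.0):
    """Weight change for one pre/post spike pair (times in ms).

    Pair-based STDP with exponential windows; constants are illustrative.
    """
    dt = t_post - t_pre
    if dt > 0:
        # Input spike arrived before the output spike: strengthen the synapse.
        return a_plus * math.exp(-dt / tau_plus)
    if dt < 0:
        # Input spike arrived after the output spike: weaken the synapse.
        return -a_minus * math.exp(dt / tau_minus)
    return 0.0


print("pre 5 ms before post:", stdp_delta_w(t_pre=10.0, t_post=15.0))
print("pre 5 ms after post: ", stdp_delta_w(t_pre=15.0, t_post=10.0))
```

The update depends only on the timing of the two spikes at one synapse, so no global error signal is needed. That is what makes the rule biologically plausible, and also why it struggles to match BP-trained networks.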


Help from deep learning

To improve performance, researchers have explored other methods that are not biologically interpretable. Since the ReLU activation function can be simulated by rate-coded spikes, an ANN model with ReLU can be converted into an SNN model [5]. However, approximating continuous values accurately requires a very large number of time steps.
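The link between rate coding and ReLU can be illustrated with a single integrate-and-fire neuron driven by a constant input. This is only a sketch under simplifying assumptions: the input lies in [0, 1] (the rate saturates at one spike per step), the threshold is 1, and the neuron resets by subtraction. The name `if_firing_rate` is hypothetical.

```python
def if_firing_rate(x, timesteps, v_threshold=1.0):
    """Firing rate of an integrate-and-fire neuron under constant input x."""
    v = 0.0
    spike_count = 0
    for _ in range(timesteps):
        v += x
        if v >= v_threshold:
            spike_count += 1
            v -= v_threshold  # reset by subtraction keeps the residual charge
    return spike_count / timesteps


def relu(x):
    return max(0.0, x)


# The spike rate approaches ReLU(x) only as the number of time steps grows.
x = 0.337
for timesteps in (10, 100, 1000):
    rate = if_firing_rate(x, timesteps)
    print(timesteps, rate, abs(rate - relu(x)))

# A negative input never reaches threshold, matching ReLU(x) = 0.
print(if_firing_rate(-0.5, 100))
```

With T time steps the rate can only take values that are multiples of 1/T, so the precision of the converted network grows with the simulation length, which is the latency cost mentioned above.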

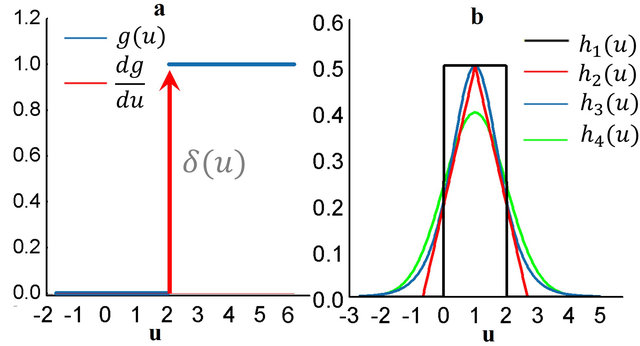

Another idea is to modify the SNN itself, for example by approximating the spike, so that BP can be applied. BP is difficult to apply because the binary spike is non-differentiable. So why not use an approximate, differentiable spike instead? Once the derivative of the spike activity is approximated, BP can be used, and it is convenient thanks to the automatic differentiation of PyTorch. Deep-learning-based training methods strike a balance between ease of use and performance [6].
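The derivative approximation can be sketched by hand for a single neuron, without any framework. The forward pass keeps the true step function, while the backward pass substitutes a rectangular window around the threshold, which is one of several possible curves. The function names, the window width, the squared-error loss and the learning rate below are all assumptions made for illustration.

```python
def spike_forward(v, v_threshold=1.0):
    """Forward pass: Heaviside step, spike when v reaches the threshold."""
    return 1.0 if v >= v_threshold else 0.0


def spike_surrogate_grad(v, v_threshold=1.0, width=1.0):
    """Backward pass: rectangular stand-in for the step's derivative."""
    return 1.0 / width if abs(v - v_threshold) < width / 2 else 0.0


# One neuron with v = w * x and loss = (spike - target) ** 2.
w, x, target = 0.8, 1.0, 1.0
v = w * x
spike = spike_forward(v)  # v is below threshold, so no spike yet

# Chain rule, with the surrogate replacing d(spike)/d(v).
dloss_dspike = 2.0 * (spike - target)
dloss_dw = dloss_dspike * spike_surrogate_grad(v) * x
w_new = w - 0.1 * dloss_dw  # gradient step with an illustrative learning rate

print("gradient:", dloss_dw)
print("updated weight:", w_new)
print("spike after update:", spike_forward(w_new * x))
```

The true derivative of the step function is zero almost everywhere, so without the surrogate the weight would never move. In a framework with automatic differentiation, the same idea is usually expressed as a custom operation whose backward pass returns the surrogate.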

Derivative approximation of the non-differentiable spike activity. (a) Step activation function. (b) Several typical curves to approximate the derivative of spike activity.

Why choose SNN? Discussion of advantages and disadvantages

Advantages

There are several advantages of SNN, including:

- Rich spatiotemporal features. An SNN has a time dimension, so it is well suited to dynamic tasks such as SLAM, speech and dynamic vision.

- Hardware friendly. Since the neurons communicate with each other through binary spikes, multiplication degenerates into addition. For example, in an ANN model, the feedforward computation of a neuron can be written as:

  $$y = \sum_i w_i x_i$$

  in which $y$ is the output, $w_i$ is the synaptic weight and $x_i$ is the input. In an SNN model, however, the inputs $x_i$ are binary spikes, so the feedforward computation reduces to a sum over the inputs that spiked:

  $$y = \sum_{i:\, x_i = 1} w_i$$

  As we all know, addition is much more hardware friendly than multiplication.

- Energy conservation. Only neurons whose membrane potential reaches the threshold are active and emit spikes, so only a small fraction of the neurons in an SNN model work at any given time. Therefore, the energy consumption is low. In ANN models, by contrast, all the neurons work together all the time. Imagine if the neurons in your brain worked like an ANN all the time: you could eat and drink all day without gaining weight.
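The hardware-friendly point in the list above can be checked with a small sketch that compares an ANN-style weighted sum against an SNN-style accumulation over binary spikes. The function names and the example values are assumptions for illustration.

```python
def dense_forward(weights, inputs):
    """ANN-style weighted sum: one multiply-accumulate per input."""
    return sum(w * x for w, x in zip(weights, inputs))


def spiking_forward(weights, spikes):
    """SNN-style sum: add a weight only where a spike (1) arrived."""
    total = 0.0
    for w, s in zip(weights, spikes):
        if s:
            total += w  # no multiplication needed for binary inputs
    return total


weights = [0.5, -0.25, 0.75, 1.0]
spikes = [1, 0, 1, 0]

# Both give the same result, but the spiking version only performs additions
# and skips silent inputs entirely.
print(dense_forward(weights, spikes))
print(spiking_forward(weights, spikes))
```

Skipping silent inputs is also where the energy argument comes from: work is done only for the inputs that actually spiked.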

Disadvantages

Unfortunately, many restrictions make it difficult to realize the advantages of SNN.

First, although the performance of SNNs has been effectively improved with the help of deep learning, it is still difficult for SNN models to match, let alone surpass, the performance of ANN models. This is a fatal problem: we have to make a trade-off between the other advantages of SNNs and their poor model performance.

Then let's see what we get after paying the price in performance. Multiplication degenerating into addition sounds good. However, it cannot be implemented natively on GPUs, the most widely used AI computing platform; a GPU can only simulate this mechanism. To truly realize this operation, a specially designed brain-inspired chip is required [7].

As for energy conservation, scale is the key factor. The larger the neural network model, the more energy can be saved. However, as mentioned above, training is a real problem for SNNs, especially for large-scale SNNs. The larger the SNN model, the harder the training becomes.

Closing thoughts

Finally, SNNs have rich spatiotemporal dynamics, but this also makes their behavior more difficult to interpret. SNNs preserve more biological characteristics, but they are still an extremely long way from simulating a brain. Brain-inspired computing is still growing and full of unknowns. We should stay down-to-earth and exploit the advantages of SNNs in practical applications, which is the healthy development path for brain-inspired computing.

References

[1] He, Weihua, et al. "Comparing SNNs and RNNs on neuromorphic vision datasets: Similarities and differences." Neural Networks 132 (2020): 108-120.

[2] Deng, Lei, et al. "Rethinking the performance comparison between SNNs and ANNs." Neural Networks 121 (2020): 294-307.

[3] Hodgkin, Alan L., and Andrew F. Huxley. "A quantitative description of membrane current and its application to conduction and excitation in nerve." The Journal of Physiology 117.4 (1952): 500-544.

[4] Ghosh-Dastidar, Samanwoy, and Hojjat Adeli. "Spiking neural networks." International Journal of Neural Systems 19.04 (2009): 295-308.

[5] Cao, Yongqiang, Yang Chen, and Deepak Khosla. "Spiking deep convolutional neural networks for energy-efficient object recognition." International Journal of Computer Vision 113.1 (2015): 54-66.

[6] Wu, Yujie, et al. "Spatio-temporal backpropagation for training high-performance spiking neural networks." Frontiers in Neuroscience 12 (2018): 331.

[7] Pei, Jing, et al. "Towards artificial general intelligence with hybrid Tianjic chip architecture." Nature 572.7767 (2019): 106-111.