Approximation Algorithms: Introduction to Network Design

These are the lecture notes from Chandra Chekuri's CS583 course on Approximation Algorithms. Chapter 10: Introduction to Network Design.


Chapter 10

(Parts of this chapter are based on previous scribed lecture notes by Nitish Korula and Sungjin Im.)

Network Design is a broad topic that deals with finding a subgraph

Connectivity problems are a large part of network design. As we already saw, MST is the most basic one and can be solved in polynomial time. The Steiner Tree problem is a generalization where we are given a subset

Graph theory plays an important role in most network algorithmic questions. The complexity and nature of the problems vary substantially based on whether the graph is undirected or directed. To illustrate this consider the Directed Steiner Tree problem. Here

This chapter will focus on two basic problems which are extensively studied and describe some simple approximation algorithms for them. Pointers are provided for sophisticated results including some very recent ones.

10.1 The Steiner Tree Problem

In the Steiner Tree problem, the input is a graph

The Steiner Tree problem is NP-Hard, and also APX-Hard [6]. The latter means that there is a constant

Remark 10.1. If

Remark 10.2. There is

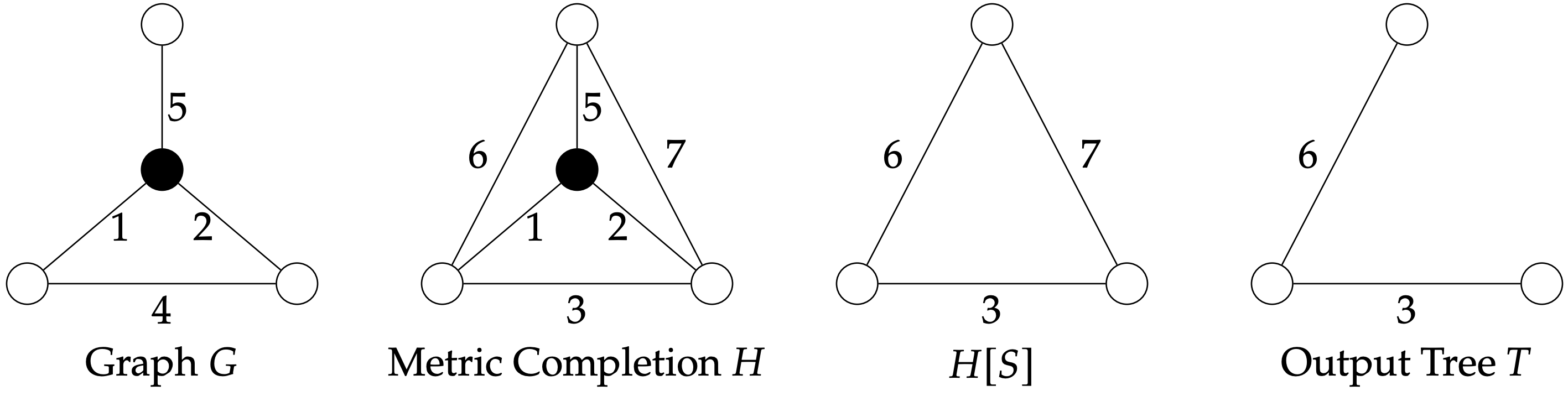

Definition 10.1. Given a connected graph

Figure 10.1: On the left, a graph. On the right, its metric completion, with new edges and modified edge costs in red.

Observation 10.2. To solve the Steiner Tree problem on a graph
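The metric completion of Definition 10.1 can be computed with any all-pairs shortest-path routine. The following is a minimal sketch using Floyd-Warshall; the function name `metric_completion`, the edge-list input format, and the small example graph are assumptions made for illustration, not part of the notes.

```python
from math import inf

def metric_completion(n, edges):
    """Return the n x n matrix of shortest-path distances (Floyd-Warshall).

    `edges` is a list of (u, v, cost) triples of an undirected graph on
    vertices 0..n-1, assumed connected with non-negative costs.
    """
    dist = [[0 if i == j else inf for j in range(n)] for i in range(n)]
    for u, v, c in edges:
        if c < dist[u][v]:
            dist[u][v] = dist[v][u] = c
    for k in range(n):
        for i in range(n):
            for j in range(n):
                if dist[i][k] + dist[k][j] < dist[i][j]:
                    dist[i][j] = dist[i][k] + dist[k][j]
    return dist

# Hypothetical example: a path 0-1-2 plus an expensive direct edge 0-2.
example_edges = [(0, 1, 1), (1, 2, 1), (0, 2, 5)]
completion = metric_completion(3, example_edges)
```

In the example, the direct edge of cost 5 is replaced by the cheaper two-hop distance, so the resulting costs satisfy the triangle inequality.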

We now look at two approximation algorithms for the Steiner Tree problem.

10.1.1 The MST Algorithm

The following algorithm, with an approximation ratio of

| Let |

| Let |

| Output |

(Here, we use the notation
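As a rough illustration of the MST heuristic, the sketch below assumes the usual formulation: build the metric completion, then compute a minimum spanning tree on the terminals alone. The names `shortest_path_matrix` and `steiner_mst_cost` and the star-shaped example are hypothetical; the function returns only the cost, and an actual tree in the original graph would be obtained by expanding each metric edge into a shortest path.

```python
from math import inf

def shortest_path_matrix(n, edges):
    """All-pairs shortest paths (Floyd-Warshall) on an undirected graph."""
    dist = [[0 if i == j else inf for j in range(n)] for i in range(n)]
    for u, v, c in edges:
        if c < dist[u][v]:
            dist[u][v] = dist[v][u] = c
    for k in range(n):
        for i in range(n):
            for j in range(n):
                if dist[i][k] + dist[k][j] < dist[i][j]:
                    dist[i][j] = dist[i][k] + dist[k][j]
    return dist

def steiner_mst_cost(n, edges, terminals):
    """Cost of an MST on the terminals in the metric completion (Prim)."""
    dist = shortest_path_matrix(n, edges)
    terminals = list(terminals)
    # best[t] = cheapest metric edge from t to the tree built so far
    best = {t: dist[terminals[0]][t] for t in terminals[1:]}
    total = 0
    while best:
        t = min(best, key=best.get)
        total += best.pop(t)
        for u in best:
            best[u] = min(best[u], dist[t][u])
    return total

# Hypothetical example: a star with center 0 and three terminal leaves.
star = [(0, 1, 1), (0, 2, 1), (0, 3, 1)]
heuristic_cost = steiner_mst_cost(4, star, [1, 2, 3])
```

On this star the optimal Steiner tree uses the center and costs 3, while the heuristic pays 4 because every pair of terminals is at metric distance 2.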

The following lemma is central to the analysis of the algorithm SteinerMST.

Lemma 10.1. For any instance I of Steiner Tree , let

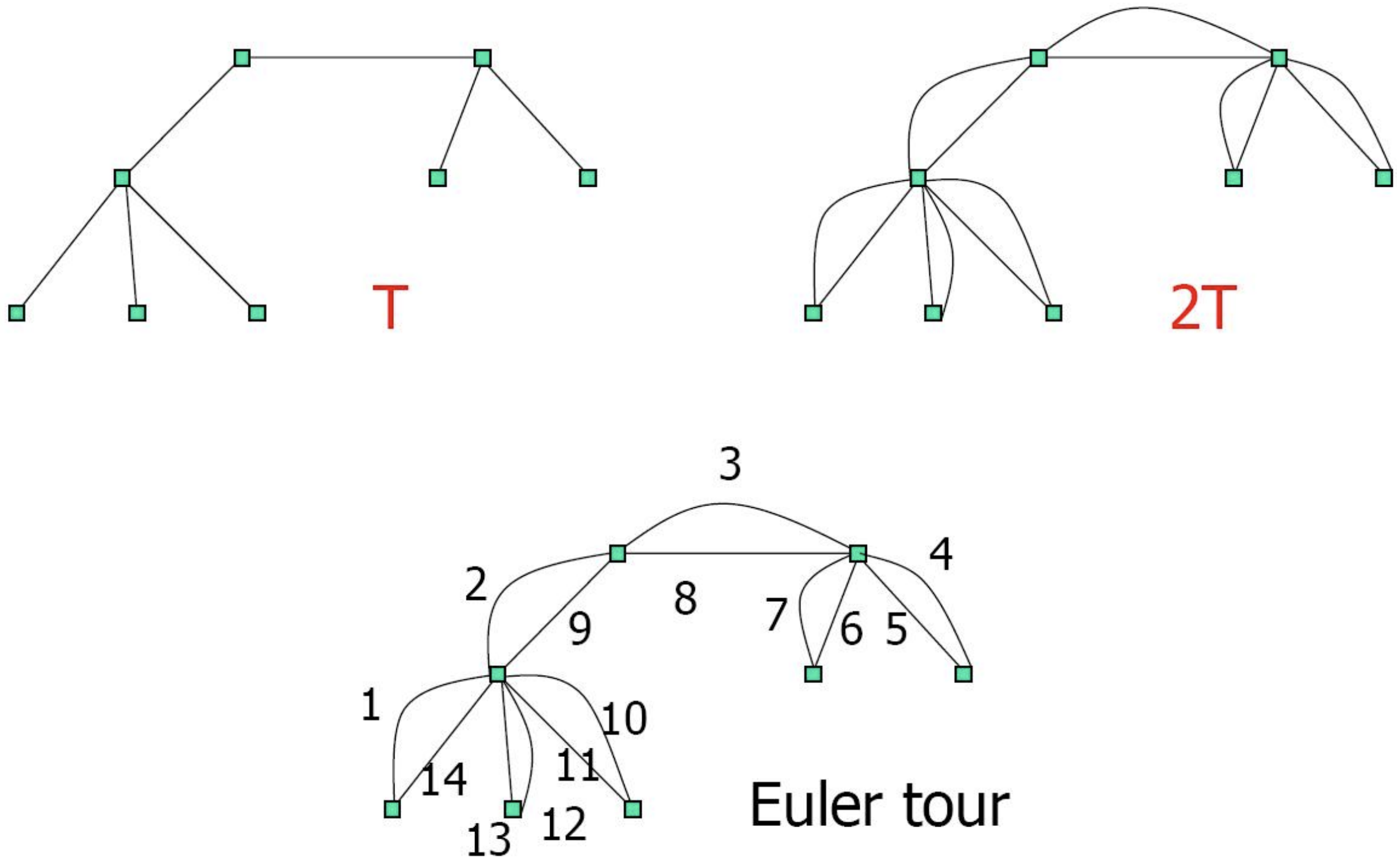

Figure 10.2: Illustrating the MST Heuristic for Steiner Tree

Before we prove the lemma, we note that if there exists some spanning tree in

Proof of Lemma 10.1. Let

Figure 10.3: Doubling edges of

It is easy to see that

A tight example: The following example (Fig. 10.4 below) shows that this analysis is tight; there are instances of Steiner Tree where the SteinerMST algorithm finds a tree of

Figure 10.4: A tight example for the SteinerMST algorithm

10.1.2 The Greedy/Online Algorithm

We now describe another simple algorithm for the Steiner Tree problem, due to [10].

| Let |

| Let |

| For |
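The greedy rule can be sketched as follows, assuming the usual online formulation: start with the first terminal, and connect each subsequent terminal to the current tree by a shortest path. The adjacency-list format, the function name `greedy_steiner`, and the example graph are illustrative assumptions.

```python
import heapq

def greedy_steiner(adj, terminals):
    """adj: dict vertex -> list of (neighbor, cost). Returns (edges, cost)."""
    tree_vertices = {terminals[0]}
    tree_edges = []
    total = 0
    for t in terminals[1:]:
        if t in tree_vertices:
            continue
        # Dijkstra from t, stopping at the first settled tree vertex.
        dist = {t: 0}
        parent = {}
        heap = [(0, t)]
        done = set()
        while heap:
            d, u = heapq.heappop(heap)
            if u in done:
                continue
            done.add(u)
            if u in tree_vertices:
                break
            for v, c in adj[u]:
                if v not in done and d + c < dist.get(v, float("inf")):
                    dist[v] = d + c
                    parent[v] = u
                    heapq.heappush(heap, (d + c, v))
        else:
            raise ValueError("terminal is not connected to the tree")
        total += dist[u]
        # Walk the shortest path back to t, adding it to the tree.
        while u != t:
            tree_edges.append((parent[u], u))
            tree_vertices.add(u)
            u = parent[u]
        tree_vertices.add(t)
    return tree_edges, total

# Hypothetical example: a star with center 0 and three terminal leaves.
star_adj = {0: [(1, 1), (2, 1), (3, 1)], 1: [(0, 1)], 2: [(0, 1)], 3: [(0, 1)]}
greedy_edges, greedy_cost = greedy_steiner(star_adj, [1, 2, 3])
```

Because each terminal is attached as soon as it is processed, the same routine works when terminals arrive one at a time.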

GreedySteiner is a

Theorem 10.3. The algorithm GreedySteiner has an approximation ratio of

Note that this is an online algorithm; terminals are considered in an arbitrary order, and when a terminal is considered, it is immediately connected to the existing tree. Thus, even if the algorithm could not see the entire input at once, but instead terminals were revealed one at a time and the algorithm had to produce a Steiner tree at each stage, the algorithm GreedySteiner outputs a tree of cost no more than

To prove Theorem 10.3, we introduce some notation. Let

Claim 10.1.1. For all

Proof. Suppose by way of contradiction this were not true; since

We argue that no two terminals in

Therefore, the minimum distance between any two terminals in

Given this claim, it is easy to prove Theorem 10.3.

Question 10.2. Give an example of a graph and an ordering of terminals such that the output of the Greedy algorithm is

Remark 10.3. We emphasize again that the analysis above holds for every ordering of the terminals. A natural variant might be to adaptively order the terminals so that in each iteration

10.1.3 LP Relaxation

A natural LP relaxation for the Steiner Tree problem is the following. For each edge

Note that the preceding LP has an exponential number of constraints. However, there is a polynomial-time separation oracle. Given
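For concreteness, the standard cut-based relaxation that matches this description can be written as below. The symbols are assumptions made here: $S$ denotes the terminal set, $c_e$ the cost of edge $e$, and $\delta(A)$ the set of edges with exactly one endpoint in $A$.

$$
\begin{aligned}
\min \quad & \sum_{e \in E} c_e x_e \\
\text{s.t.} \quad & \sum_{e \in \delta(A)} x_e \ge 1 && \forall A \subset V \text{ with } A \cap S \neq \emptyset \text{ and } S \setminus A \neq \emptyset \\
& x_e \ge 0 && \forall e \in E
\end{aligned}
$$

One way to separate a candidate $x \ge 0$ is to treat the values $x_e$ as edge capacities, fix one terminal, and compute a minimum cut between it and every other terminal; any cut of capacity less than $1$ yields a violated constraint, and if no such cut exists then $x$ is feasible.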

10.1.4 Other Results on Steiner Trees

The 2-approximation algorithm using the MST Heuristic is not the best approximation algorithm for the Steiner Tree problem currently known. Some other results on this problem are listed below.

- The first algorithm to obtain a ratio better than $2$ was due to Alexander Zelikovsky [11]; the approximation ratio of this algorithm was $11/6 \approx 1.83$. This was improved to $1 + \frac{\ln 3}{2} \approx 1.55$ [12]; that algorithm is a local-search improvement starting from the MST heuristic and follows the original approach of Zelikovsky.

- Byrka et al. gave an algorithm with an approximation ratio of $\ln 4 + \varepsilon < 1.39$ [13], which is currently the best known for this problem. The original analysis combined several techniques; subsequently, an LP-based proof [14] achieved the same approximation via the so-called Hypergraphic LP relaxation.

- The bidirected cut LP relaxation for Steiner Tree was proposed by [15]; it has an integrality gap of at most $2$, but it is conjectured that the gap is smaller. No algorithm is currently known that exploits this LP relaxation to obtain an approximation ratio better than that of the SteinerMST algorithm. Though the true integrality gap is not known, there are examples that show it is at least $6/5$ [16].

- For many applications, the vertices can be modeled as points on the plane, where the distance between them is simply the Euclidean distance. The MST-based algorithm performs fairly well on such instances; it has an approximation ratio of $2/\sqrt{3} \approx 1.15$ [17]. An example which achieves this bound is three points at the corners of an equilateral triangle, say of side-length 1; the MST heuristic outputs a tree of cost 2 (two sides of the triangle) while the optimum solution is to connect the three points to a Steiner vertex which is the circumcenter of the triangle. One can do better still for instances in the plane (or in any Euclidean space of small dimension); for any $\varepsilon > 0$, there is a $(1+\varepsilon)$-approximation algorithm that runs in polynomial time [18]. Such an approximation scheme is also known for planar graphs [19] and more generally bounded-genus graphs.
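The equilateral-triangle example can be checked numerically. The short computation below is only a sanity check of the arithmetic; the variable names are illustrative.

```python
from math import sqrt

side = 1.0
mst_cost = 2 * side                   # MST heuristic: two sides of the triangle
center_to_corner = side / sqrt(3)     # circumradius of an equilateral triangle
steiner_cost = 3 * center_to_corner   # three spokes to the circumcenter
ratio = mst_cost / steiner_cost       # equals 2 / sqrt(3), roughly 1.1547
```

The optimum therefore costs $\sqrt{3}$ and the heuristic loses a factor of $2/\sqrt{3}$ on this instance.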

10.2 The Traveling Salesperson Problem (TSP)

10.2.1 TSP in Undirected Graphs

In the Traveling Salesperson Problem (TSP), we are given an undirected graph

TSP is known to be NP-Hard. Moreover, we cannot hope to find a good approximation algorithm for it unless

Theorem 10.4 ([20]). Let

Proof. For the sake of contradiction, suppose we have an approximation algorithm

We observe that if

Since we cannot even approximate the general TSP problem, we consider more tractable variants.

- Metric-TSP: In Metric-TSP, the instance is a complete graph $G = (V, E)$ with cost $c(e)$ on each edge $e \in E$, where $c$ satisfies the triangle inequality, i.e. $c(uw) \le c(uv) + c(vw)$ for any $u, v, w \in V$.

- TSP-R: TSP with repetitions of vertices allowed. The input is a graph $G = (V, E)$ with non-negative edge costs as in TSP. Now we seek a minimum-cost walk that visits each vertex at least once and returns to the starting vertex.

Exercise 10.1. Show that an

We focus on Metric-TSP for the rest of this section. We first consider a natural greedy approach, the Nearest Neighbor Heuristic (NNH).

| Start at an arbitrary vertex |

| While (there are unvisited vertices) |

| Return to |
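A minimal Python sketch of the Nearest Neighbor Heuristic follows. It assumes the instance is given as a symmetric distance matrix `dist`; the function name, the tie-breaking by vertex index, and the example points are assumptions for illustration.

```python
def nearest_neighbor_tour(dist, start=0):
    """Greedy tour: repeatedly move to the closest unvisited vertex."""
    n = len(dist)
    tour = [start]
    unvisited = set(range(n)) - {start}
    current = start
    while unvisited:
        # Ties are broken by vertex index to keep the result deterministic.
        nxt = min(unvisited, key=lambda v: (dist[current][v], v))
        unvisited.remove(nxt)
        tour.append(nxt)
        current = nxt
    # Close the tour by returning to the start vertex.
    cost = sum(dist[tour[i]][tour[(i + 1) % n]] for i in range(n))
    return tour, cost

# Hypothetical example: four points on a line at positions 0, 1, 3, 6.
positions = [0, 1, 3, 6]
line_dist = [[abs(p - q) for q in positions] for p in positions]
nn_tour, nn_cost = nearest_neighbor_tour(line_dist)
```

On the line example the heuristic walks left to right and then pays the full length of the line to return.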

Exercise 10.2.

1. Prove that NNH is an $O(\log n)$-approximation algorithm.
2. NNH is not an $O(1)$-approximation algorithm; can you find an example to show this? In fact one can show a lower bound of $\Omega(\log n)$ on the approximation ratio achieved by NNH.

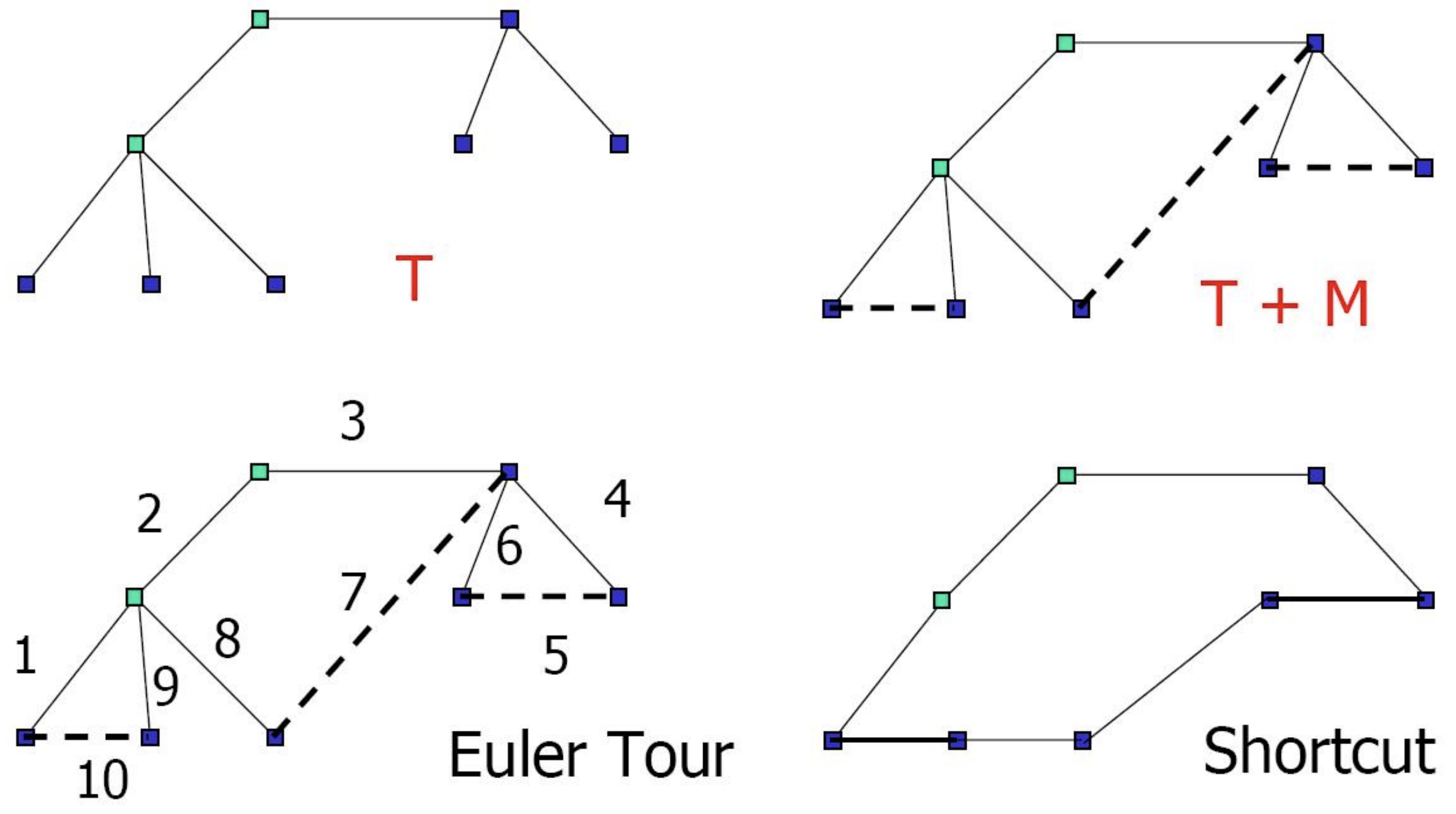

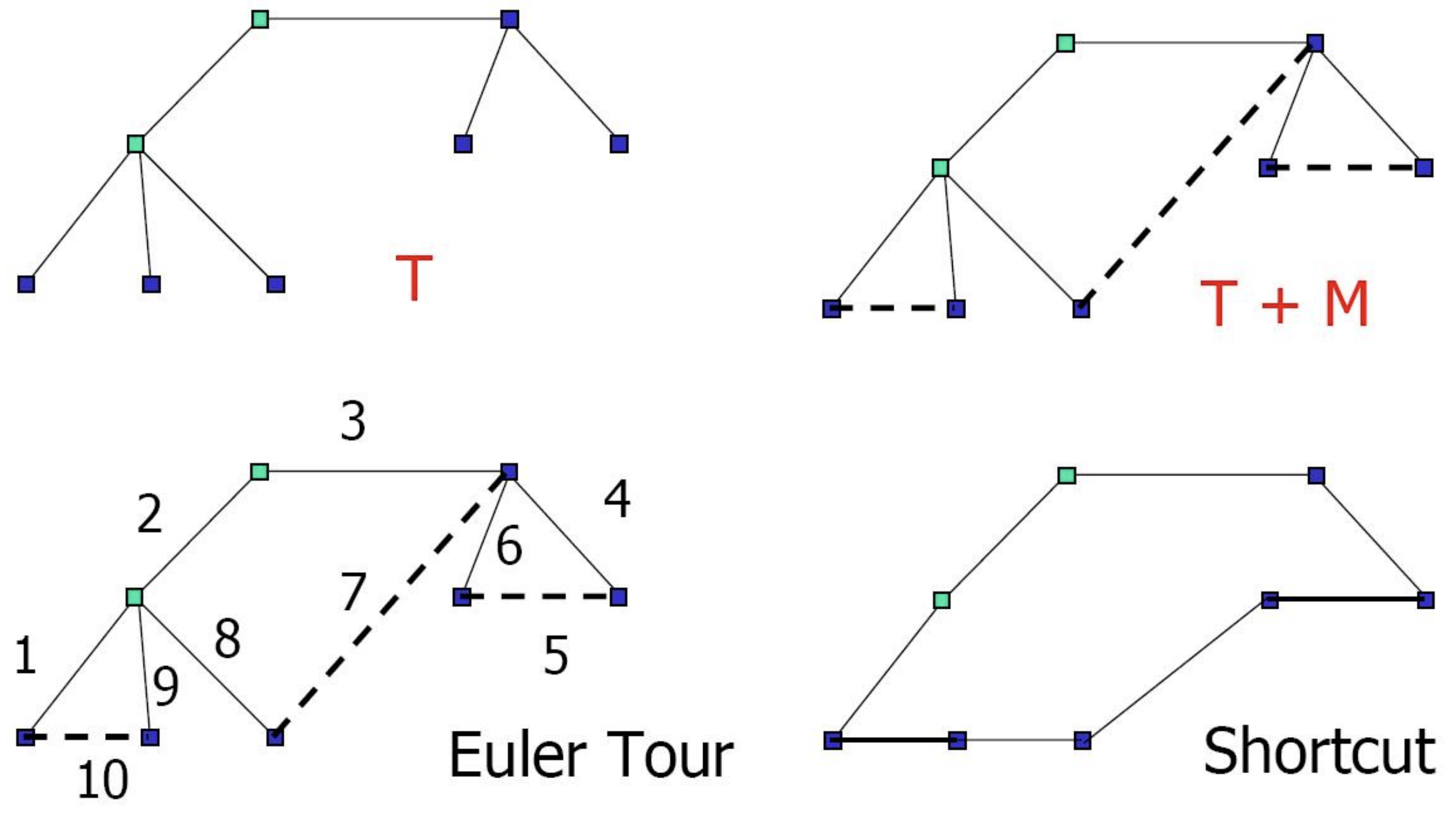

There are constant-factor approximation algorithms for TSP; we now consider an MST-based algorithm. See Fig 10.5.

| Compute an MST |

| Obtain an Eulerian graph |

| An Eulerian tour of |

| Obtain a Hamiltonian cycle by shortcutting the tour. |

Figure 10.5: MST Based Heuristic
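The MST-based heuristic can be sketched compactly, since shortcutting an Eulerian tour of the doubled tree visits the vertices in a depth-first preorder of the tree. The sketch below assumes a metric given as a distance matrix; the name `tsp_mst_tour` and the unit-square example are hypothetical.

```python
from math import hypot

def tsp_mst_tour(dist, root=0):
    """Return (tour, tour_cost, mst_cost) for the MST heuristic."""
    n = len(dist)
    in_tree = [False] * n
    best = [float("inf")] * n
    parent = [None] * n
    children = [[] for _ in range(n)]
    best[root] = 0
    mst_cost = 0
    for _ in range(n):  # Prim's algorithm on the complete graph
        u = min((v for v in range(n) if not in_tree[v]), key=lambda v: best[v])
        in_tree[u] = True
        mst_cost += best[u]
        if parent[u] is not None:
            children[parent[u]].append(u)
        for v in range(n):
            if not in_tree[v] and dist[u][v] < best[v]:
                best[v] = dist[u][v]
                parent[v] = u
    # Preorder traversal = Euler tour of the doubled tree with shortcuts.
    tour, stack = [], [root]
    while stack:
        u = stack.pop()
        tour.append(u)
        stack.extend(reversed(children[u]))
    cost = sum(dist[tour[i]][tour[(i + 1) % n]] for i in range(n))
    return tour, cost, mst_cost

# Hypothetical example: corners of the unit square with Euclidean distances.
pts = [(0, 0), (0, 1), (1, 1), (1, 0)]
sq = [[hypot(a[0] - b[0], a[1] - b[1]) for b in pts] for a in pts]
mst_tour, mst_tour_cost, tree_cost = tsp_mst_tour(sq)
```

By the triangle inequality the shortcut tour costs at most twice the MST, which is the bound the analysis uses.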

Theorem 10.5. The MST heuristic (TSP-MST) is a 2-approximation algorithm.

Proof. We have

We observe that the loss of a factor 2 in the approximation ratio is due to doubling edges; we did this in order to obtain an Eulerian tour. But any graph in which all vertices have even degree is Eulerian, so one can still get an Eulerian tour by adding edges only between odd degree vertices in

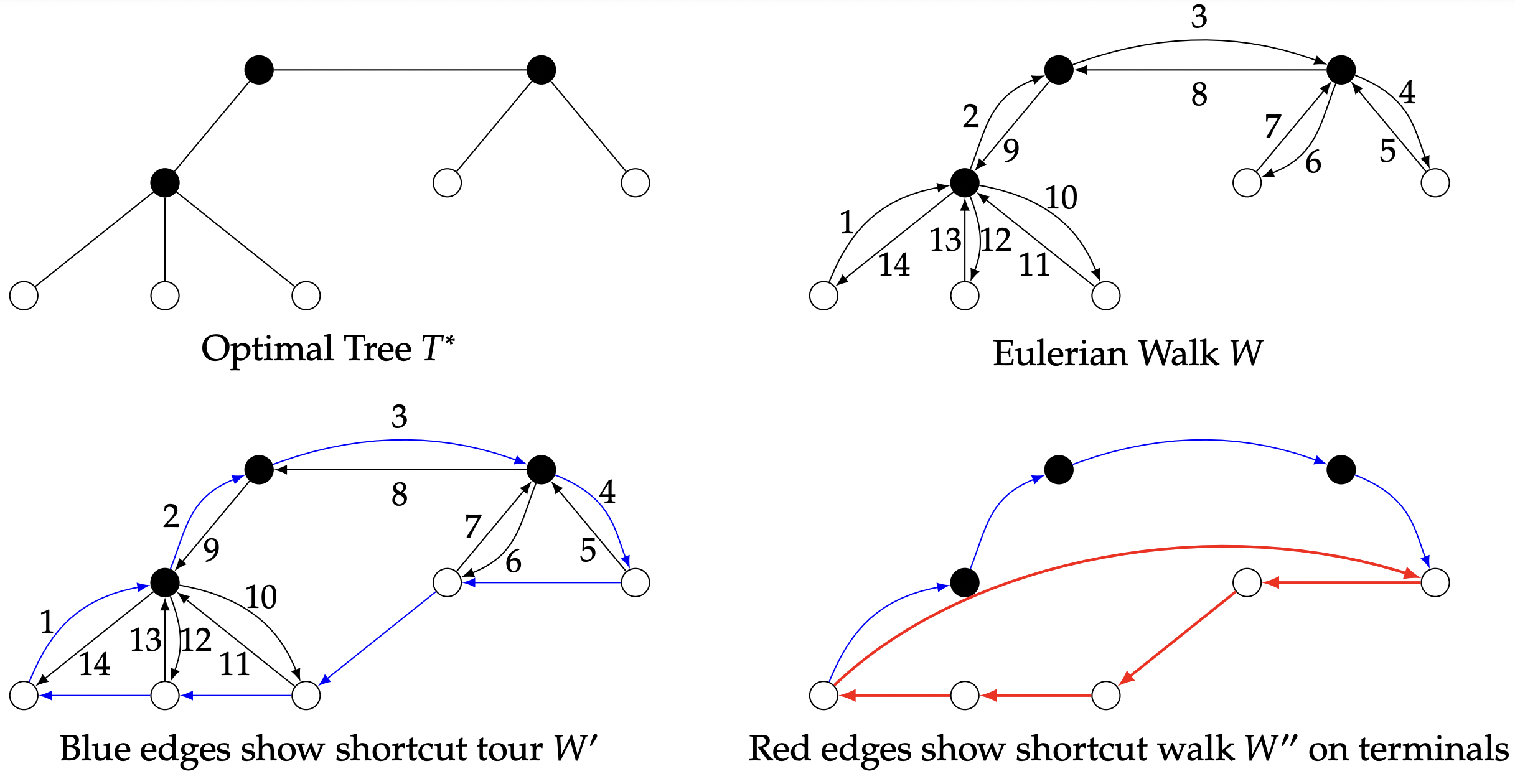

Figure 10.6: Christofides Heuristic

| Compute an MST |

| Let |

| Find a minimum cost matching |

| Add |

| Compute an Eulerian tour of |

| Obtain a Hamiltonian cycle by shortcutting the tour. |
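The steps of the Christofides heuristic can be sketched for small instances as follows. This is an illustration under stated assumptions, not an efficient implementation: the minimum-cost perfect matching on the odd-degree vertices is found by exhaustive recursion (exponential time) instead of a polynomial-time matching algorithm, and the function name and example are hypothetical.

```python
from functools import lru_cache
from math import hypot

def christofides_tour(dist):
    n = len(dist)
    # 1. MST by Prim's algorithm.
    in_tree, edges = {0}, []
    while len(in_tree) < n:
        u, v = min(((a, b) for a in in_tree for b in range(n) if b not in in_tree),
                   key=lambda e: dist[e[0]][e[1]])
        edges.append((u, v))
        in_tree.add(v)
    # 2. Vertices of odd degree in the MST (always an even number of them).
    degree = [0] * n
    for u, v in edges:
        degree[u] += 1
        degree[v] += 1
    odd = tuple(v for v in range(n) if degree[v] % 2 == 1)

    # 3. Exact min-cost perfect matching on the odd vertices (brute force).
    @lru_cache(maxsize=None)
    def match(remaining):
        if not remaining:
            return 0, ()
        first, rest = remaining[0], remaining[1:]
        best = None
        for i, partner in enumerate(rest):
            cost, pairs = match(rest[:i] + rest[i + 1:])
            cost += dist[first][partner]
            if best is None or cost < best[0]:
                best = (cost, pairs + ((first, partner),))
        return best
    _, matching = match(odd)

    # 4. Eulerian tour of MST + matching (Hierholzer's algorithm).
    adj = {v: [] for v in range(n)}
    for u, v in edges + list(matching):
        adj[u].append(v)
        adj[v].append(u)
    stack, euler = [0], []
    while stack:
        u = stack[-1]
        if adj[u]:
            v = adj[u].pop()
            adj[v].remove(u)
            stack.append(v)
        else:
            euler.append(stack.pop())
    # 5. Shortcut repeated vertices to obtain a Hamiltonian cycle.
    seen, tour = set(), []
    for v in euler:
        if v not in seen:
            seen.add(v)
            tour.append(v)
    cost = sum(dist[tour[i]][tour[(i + 1) % n]] for i in range(n))
    return tour, cost

# Hypothetical example: corners of the unit square with Euclidean distances.
pts = [(0, 0), (0, 1), (1, 1), (1, 0)]
sq = [[hypot(a[0] - b[0], a[1] - b[1]) for b in pts] for a in pts]
chr_tour, chr_cost = christofides_tour(sq)
```

On the unit square the MST is a path of three sides, the matching adds the fourth side between the two endpoints, and the resulting tour is the square itself.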

Theorem 10.6. Christofides Heuristic is a 1.5-approximation algorithm.

Proof. The main part of the proof is to show that

Let

10.2.2 LP Relaxation

We describe a well-known LP relaxation for TSP called the Subtour-Elimination LP. It is sometimes also called the Held-Karp LP relaxation, although the formulation was first given by Dantzig, Fulkerson and Johnson [22]. The LP relaxation has a variable

The relaxation is not useful for a general graph since we saw that TSP is not approximable. To obtain a relaxation for Metric-TSP we apply the above to the metric completion of the graph
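For reference, the Subtour-Elimination LP is usually stated as follows. The notation is an assumption made here: $x_e$ is the variable for edge $e$, $c_e$ its cost, and $\delta(U)$ the set of edges with exactly one endpoint in $U$.

$$
\begin{aligned}
\min \quad & \sum_{e \in E} c_e x_e \\
\text{s.t.} \quad & \sum_{e \in \delta(v)} x_e = 2 && \forall v \in V \\
& \sum_{e \in \delta(U)} x_e \ge 2 && \forall\, \emptyset \neq U \subsetneq V \\
& 0 \le x_e \le 1 && \forall e \in E
\end{aligned}
$$

The degree constraints say that a tour uses exactly two edges at each vertex, and the cut constraints rule out solutions that split into several disjoint subtours.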

Another alternative is to consider the following LP, which views the problem as finding a connected Eulerian multi-graph of the underlying graph

Wolsey showed that the

Remarks

- In practice, local search heuristics are widely used and they perform extremely well. A popular heuristic, 2-Opt, is to swap pairs of tour edges from $xy, zw$ to $xz, yw$ or $xw, yz$, if it improves the tour.

- It was a major open problem to improve the approximation ratio of $3/2$ for Metric-TSP; it is conjectured that the Held-Karp LP relaxation [23] gives a ratio of $4/3$. In a breakthrough, Oveis Gharan, Saberi and Singh [24] obtained a $(3/2 - \delta)$-approximation for some small but fixed $\delta > 0$ for the important special case where $c(e) = 1$ for each edge $e$ (called Graphic-TSP). Very recently the $3/2$ ratio was finally broken for the general case [25].
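A basic version of the 2-Opt local search can be sketched as below: it removes two tour edges and reconnects the tour by reversing the segment between them whenever this lowers the cost. The function names, the improvement tolerance, and the example are illustrative assumptions.

```python
from math import hypot

def tour_cost(tour, dist):
    n = len(tour)
    return sum(dist[tour[i]][tour[(i + 1) % n]] for i in range(n))

def two_opt(tour, dist):
    """Apply improving 2-Opt moves until none remains."""
    tour = list(tour)
    n = len(tour)
    improved = True
    while improved:
        improved = False
        for i in range(n - 1):
            for j in range(i + 2, n):
                if i == 0 and j == n - 1:
                    continue  # these two tour edges share a vertex
                a, b = tour[i], tour[i + 1]
                c, d = tour[j], tour[(j + 1) % n]
                # Replace edges ab and cd by ac and bd.
                delta = dist[a][c] + dist[b][d] - dist[a][b] - dist[c][d]
                if delta < -1e-12:
                    tour[i + 1:j + 1] = tour[i + 1:j + 1][::-1]
                    improved = True
    return tour

# Hypothetical example: a self-crossing tour on the unit square.
pts = [(0, 0), (0, 1), (1, 1), (1, 0)]
sq = [[hypot(a[0] - b[0], a[1] - b[1]) for b in pts] for a in pts]
improved_tour = two_opt([0, 2, 1, 3], sq)
```

Starting from the tour that uses both diagonals, a single move uncrosses them and yields the perimeter of the square.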

10.2.3 TSP in Directed Graphs

In this subsection, we consider TSP in directed graphs. As in undirected TSP, we need to relax the problem conditions to get any positive result. Again, allowing each vertex to be visited multiple times is equivalent to imposing the asymmetric triangle inequality

The MST-based heuristic for the undirected case has no meaningful generalization to the directed setting. This is because costs on edges are not symmetric. Hence, we need another approach. The Cycle Shrinking Algorithm repeatedly finds a min-cost cycle cover and shrinks cycles, combining the cycle covers found. Recall that a cycle cover is a collection of disjoint cycles covering all vertices. It is known that finding a minimum-cost cycle cover can be done in polynomial time (see Homework 0). The Cycle Shrinking Algorithm achieves a

Figure 10.7: A directed graph and a valid Hamiltonian walk

| Transform |

| If |

| Find a minimum cost cycle cover with cycles |

| From each |

| Recursively solve problem on |

| Shortcut |

For a snapshot of the Cycle Shrinking Algorithm, see Fig 10.8.

Figure 10.8: A snapshot of Cycle Shrinking Algorithm. To the left, a cycle cover

Lemma 10.3. Let the cost of edges in

Proof. Since

Lemma 10.4. The cost of a min-cost cycle-cover is at most the cost of an optimal TSP tour.

Proof. An optimal TSP tour is a cycle cover.
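Lemma 10.4 can be checked on a small instance by brute force. The sketch below enumerates successor permutations: one without fixed points is a cycle cover, and one that forms a single cycle through all vertices is a tour. This exhaustive search is only an illustration (the notes point to a polynomial-time method for cycle covers), and the function name and cost matrix are hypothetical.

```python
from itertools import permutations

def min_cycle_cover_and_tour(cost):
    """Return (min cycle cover cost, min tour cost) by exhaustive search."""
    n = len(cost)
    best_cover = best_tour = float("inf")
    for succ in permutations(range(n)):
        if any(succ[v] == v for v in range(n)):
            continue  # a fixed point would be a self-loop, not a cycle
        total = sum(cost[v][succ[v]] for v in range(n))
        best_cover = min(best_cover, total)
        # Follow successors from 0; a single cycle of length n is a tour.
        length, v = 1, succ[0]
        while v != 0:
            v = succ[v]
            length += 1
        if length == n:
            best_tour = min(best_tour, total)
    return best_cover, best_tour

# Hypothetical example: two cheap 2-cycles joined by expensive arcs.
arc_cost = [
    [0, 1, 5, 5],
    [1, 0, 5, 5],
    [5, 5, 0, 1],
    [5, 5, 1, 0],
]
cover_cost, tour_cost_opt = min_cycle_cover_and_tour(arc_cost)
```

Here the cheapest cycle cover consists of the two 2-cycles, while every tour must cross between the two pairs twice, so the cycle cover is strictly cheaper than the optimal tour.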

Theorem 10.7. The Cycle Shrinking Algorithm is a

Proof. We prove the above by induction on

The algorithm outputs

10.2.4 LP Relaxation

The LP relaxation for ATSP is given below. For each arc

Remarks:

- It has remained an open problem for more than 25 years whether there exists a constant factor approximation for ATSP. Asadpour et al. [26] have obtained an $O(\log n / \log \log n)$-approximation for ATSP using some very novel ideas and a well-known LP relaxation.

1. Eran Halperin and Robert Krauthgamer. “Polylogarithmic inapproximability”. In: Proceedings of the thirty-fifth annual ACM symposium on Theory of computing. 2003, pp. 585–594.

2. Moses Charikar, Chandra Chekuri, To-Yat Cheung, Zuo Dai, Ashish Goel, Sudipto Guha, and Ming Li. “Approximation algorithms for directed Steiner problems”. In: Journal of Algorithms 33.1 (1999), pp. 73–91.

3. Anupam Gupta and Jochen Könemann. “Approximation algorithms for network design: A survey”. In: Surveys in Operations Research and Management Science 16.1 (2011), pp. 3–20.

4. Guy Kortsarz and Zeev Nutov. “Approximating minimum cost connectivity problems”. In: Parameterized complexity and approximation algorithms. Ed. by Erik D. Demaine, MohammadTaghi Hajiaghayi, and Dániel Marx. Dagstuhl Seminar Proceedings 09511. Dagstuhl, Germany: Schloss Dagstuhl - Leibniz-Zentrum fuer Informatik, Germany, 2010. url: http://drops.dagstuhl.de/opus/volltexte/2010/2497.

5. Zeev Nutov. “Node-connectivity survivable network problems”. In: Handbook of Approximation Algorithms and Metaheuristics. Chapman and Hall/CRC, 2018, pp. 233–253.

6. Marshall Bern and Paul Plassmann. “The Steiner problem with edge lengths 1 and 2”. In: Information Processing Letters 32.4 (1989), pp. 171–176.

7. Miroslav Chlebík and Janka Chlebíková. “Approximation hardness of the Steiner tree problem on graphs”. In: Scandinavian Workshop on Algorithm Theory. Springer. 2002, pp. 170–179.

8. Variants of the Steiner Tree problem, named after Jakob Steiner, have been studied by Fermat, Weber, and others for centuries. The front cover of the course textbook contains a reproduction of a letter from Gauss to Schumacher on a Steiner tree question.

9. Hiromitsu Takahashi and A Matsuyama. “An approximate solution for the Steiner problem in graphs”. In: Math. Jap. 24.6 (1980), pp. 573–577.

10. Makoto Imase and Bernard M Waxman. “Dynamic Steiner tree problem”. In: SIAM Journal on Discrete Mathematics 4.3 (1991), pp. 369–384.

11. Alexander Z Zelikovsky. “An 11/6-approximation algorithm for the network Steiner problem”. In: Algorithmica 9.5 (1993), pp. 463–470.

12. Gabriel Robins and Alexander Zelikovsky. “Tighter bounds for graph Steiner tree approximation”. In: SIAM Journal on Discrete Mathematics 19.1 (2005), pp. 122–134.

13. Jaroslaw Byrka, Fabrizio Grandoni, Thomas Rothvoß, and Laura Sanita. “An improved LP-based approximation for Steiner tree”. In: Proceedings of the forty-second ACM symposium on Theory of computing. 2010, pp. 583–592.

14. Michel X Goemans, Neil Olver, Thomas Rothvoß, and Rico Zenklusen. “Matroids and integrality gaps for hypergraphic steiner tree relaxations”. In: Proceedings of the forty-fourth annual ACM symposium on Theory of computing. 2012, pp. 1161–1176.

15. Jack Edmonds. “Optimum branchings”. In: Journal of Research of the National Bureau of Standards, B 71 (1967), pp. 233–240.

16. Robert Vicari. “Simplex based Steiner tree instances yield large integrality gaps for the bidirected cut relaxation”. In: arXiv preprint arXiv:2002.07912 (2020).

17. D-Z Du and Frank K. Hwang. “A proof of the Gilbert-Pollak conjecture on the Steiner ratio”. In: Algorithmica 7.1 (1992), pp. 121–135.

18. Sanjeev Arora. “Polynomial time approximation schemes for Euclidean traveling salesman and other geometric problems”. In: Journal of the ACM (JACM) 45.5 (1998), pp. 753–782.

19. Glencora Borradaile, Philip Klein, and Claire Mathieu. “An $O(n \log n)$ approximation scheme for Steiner tree in planar graphs”. In: ACM Transactions on Algorithms (TALG) 5.3 (2009), pp. 1–31.

20. Sartaj Sahni and Teofilo Gonzalez. “P-complete approximation problems”. In: Journal of the ACM (JACM) 23.3 (1976), pp. 555–565.

21. Nicos Christofides. Worst-case analysis of a new heuristic for the travelling salesman problem. Tech. rep. Carnegie-Mellon Univ Pittsburgh Pa Management Sciences Research Group, 1976.

22. George Dantzig, Ray Fulkerson, and Selmer Johnson. “Solution of a large-scale traveling-salesman problem”. In: Journal of the operations research society of America 2.4 (1954), pp. 393–410.

23. Michael Held and Richard M Karp. “The traveling-salesman problem and minimum spanning trees”. In: Operations Research 18.6 (1970), pp. 1138–1162.

24. Shayan Oveis Gharan, Amin Saberi, and Mohit Singh. “A randomized rounding approach to the traveling salesman problem”. In: 2011 IEEE 52nd Annual Symposium on Foundations of Computer Science. IEEE. 2011, pp. 550–559.

25. Anna R Karlin, Nathan Klein, and Shayan Oveis Gharan. “A (slightly) improved approximation algorithm for metric TSP”. In: Proceedings of the 53rd Annual ACM SIGACT Symposium on Theory of Computing. 2021, pp. 32–45.

26. Arash Asadpour, Michel X Goemans, Aleksander Mądry, Shayan Oveis Gharan, and Amin Saberi. “An O(log n/log log n)-approximation algorithm for the asymmetric traveling salesman problem”. In: Operations Research 65.4 (2017), pp. 1043–1061. Preliminary version in Proc. of ACM-SIAM SODA, 2010.

27. Ola Svensson. “Approximating ATSP by relaxing connectivity”. In: 2015 IEEE 56th Annual Symposium on Foundations of Computer Science. IEEE. 2015, pp. 1–19.

28. Ola Svensson, Jakub Tarnawski, and László A Végh. “A constant-factor approximation algorithm for the asymmetric traveling salesman problem”. In: Journal of the ACM (JACM) 67.6 (2020), pp. 1–53.

29. Vera Traub and Jens Vygen. “An improved approximation algorithm for ATSP”. In: Proceedings of the 52nd Annual ACM SIGACT Symposium on Theory of Computing. 2020, pp. 1–13.
