Introduction to Social Dynamics through Tree Surgery

Introduction

How do social norms emerge, and how do people choose to conform or rebel against them? In this tutorial, we will predict the emergence of social norms in simulated societies. I will also introduce an algorithmic solution with an implementation in Python[1].

Consider a society where every player is facing a binary choice. Whatever the underlying problem is - wearing a facemask outdoors, using a fork in medieval Italy, rebelling against an oppressive government - it is reasonable to assume that the player gets a reinforcement benefit from conforming with the behavior of her neighbors. More masks encourage compliance, more rebels make the rebellion likelier to succeed, etc.

Each period, every player has a chance to revise the decision. This captures the idea that players adapt their behavior to their neighbors over time. They may also behave imperfectly, or, in an alternative interpretation, learn the optimal behavior by trying different things.

This tutorial largely follows the results and the exposition in

The Stag Hunt game

To make this intuition formal, suppose the players live on a

Every period each player (call this player

The payoffs from their choices are determined according to the following table (the first number is the payoff of the row player, the second is the payoff of the column player).

The players maximize the expected payoff - the average payoff from playing the chosen action against every one of the four neighbors. This particular payoff table is called a Stag Hunt in game theory. It captures a similar intuition as the famous Prisoner dilemma: a conflict between safety and cooperation.

In particular, notice that

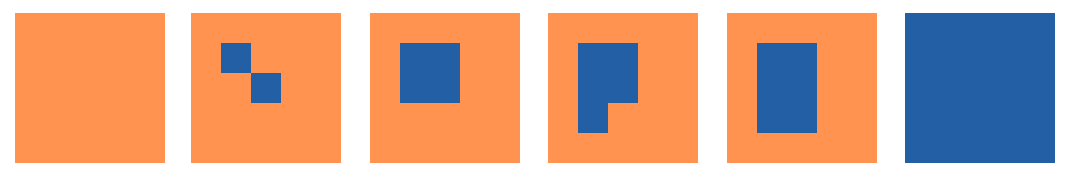

A common approach to such "agent-based" problems with many agents is to run simulations. You can see the result of one such simulation below with colors corresponding to the two actions.

It is clear that at least in this one case

The rest of this tutorial is organized into sections, starting with a simpler model without noise. This model is then used to show that the process always converges. Then we introduce noise and solve the problem using the tree surgery approach. The approach is general, and the present text advocates these tools for agent-based models. However, for practical problems, an algorithmic approach is also of interest. The reader may be inclined to jump to the last section with the Python code.

Simulation

Stable behavior

Much of the social behavior can be captured by the concept of a Markov process. A Markov process means that all the information about what the players are going to do in the next period is contained entirely in what the society is doing now, the state. The state is the current behavior of every agent. The players use the current behavior of the neighbors to revise their own, not considering what happened two, three, or more periods into the past.

First, we consider the problem without noise. We look for stable states, the states that, once entered, will persist indefinitely. The norm will emerge, the players will no longer be switching and will converge to one action. We also need to check for cycles of actions that could persist indefinitely.

Let us call a rectangle of agents that coordinate on the same action (and are surrounded by players with a different action) an enclave.

Consider first

On the other hand, any such enclave of

This state is stable and we can see then that many different configurations are stable.

Below is an embedded javascript browser implementation of the model[2]. It also allows one to change the payoff of

Click to show/hide the interactive model...

Click "setup randomly", then "run simulation". Control the speed with the slider. Use the buttons to manually change actions. Try setting the payoff to below/above/exactly 5 to see the change in behavior.

This evidence of multiple stable states complicates the problem, and two questions remain. First, could the process not converge and oscillate with players changing their behavior indefinitely in a cycle. Second, which of these stable states are more likely?

Let us start by answering the first question negatively.

Theoretical approach

Potential functions (no cycles)

To show that the process always converges, we need to show that we can (weakly) order the states of the process in some way. If from every state we eventually get to another state and can never go back, then the revision process has to eventually stop.

Economists and game theorists are used to thinking of orderings in terms of functions, similarly to how preferences are captured by utility functions. If we can find some value that decreases as the process evolves, we can order the states according to this value. One can think of this as an "energy" that leaves the system, and the evolution of the system stops once this energy reaches zero. The formal term is a potential function.

Let

For this problem such potential function is:

Indeed, suppose a player (let us call this player

The fact that

A usual warning is that

Stochastic stability (introducing noise)

Let us now turn to the original question of which of these many stable states are more likely. Without noise, it is impossible to say, as it depends on the starting conditions. Indeed if we start already in some stable state, then by definition, this state will be repeated in perpetuity.

This situation changes once we add noise. There are many possible ways to add random behavior. The simplest one is to suppose that the optimal action is chosen with probability

Costs and Trees

The tools of the trade were introduced in the 1980s-1990s and have been successfully applied to many interesting problems.

First, the 1-step cost of the process moving from state

adopting the convention that

The cost[3] is the exponential decay rate of the transition probability from

The value of

- •

- impossible transitions; - •

- transitions that do not require noise and can happen with zero noise. For example, states with rectangular -enclaves are not stable, and there are zero-cost transitions from these states; - •Some

- transitions that are only possible with random noise. The less probable is a transition, the higher its cost.

We can extend the definition of cost to paths and multi-step transitions. The overall cost of the process moving from

where

A spanning tree rooted at

Figure 1: Example of a (directed) spanning tree. Every node has exactly one outgoing edge except the root, node 0.

The correct term for a directed spanning tree is an arborescence, but social sciences tend to call them spanning trees.

Now consider the directed graph on the set of states

Tree Surgery

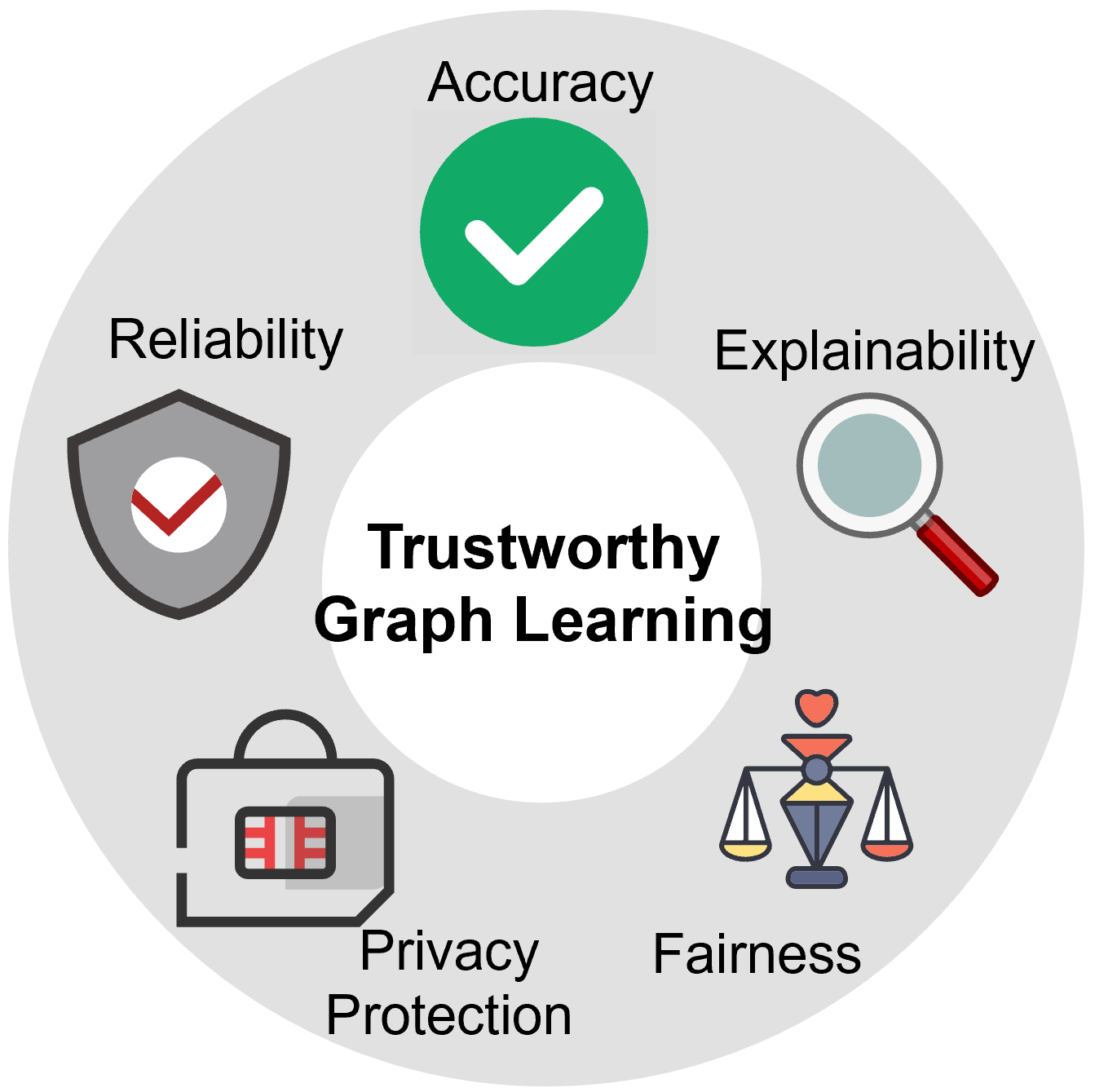

The reason for looking at costs and spanning trees is the following repeatedly rediscovered result

Theorem: A state can occur in the limit with non-vanishing probability if and only if there is a spanning tree rooted in this state whose cost is minimal.

Another important fact is that we do not need to consider all states. Indeed, a good understanding exercise is to count their number:

How many possible states (configurations of the field, not necessarily stable) are there?...

While there are many states, the set of the possible spanning trees is even bigger. Fortunately, we can focus only on the trees defined on the stable states (sometimes called bonsai trees), of which there is a much smaller number. The weights are then given by

We will illustrate the method by applying the theory above to our problem. Let us denote the state where all players play

The most important observation is the following:

There is a path from any state to, such that from every state, this path follows an edge with the lowest possible cost.

This statement is not a theorem but an applied result for our particular grid-world problem. Such statements are the essence of "tree surgery": making a graph-theoretic argument about the shape of minimal spanning trees to narrow down the possible roots that correspond to the social norms in the limit.

Let us sketch the proof of this fact below. Remember that we need only consider the stable states, so the edge is the minimum cost transition to another stable state, possibly involving multiple steps.

Indeed, take any state where some players are playing

The only other case is

This concludes the proof of the result above. The rest of the argument is short. The state

Therefore to leave the "basin of attraction" of

Thus shifting from state

On the other hand, shifting between any other stable states has the cost of at most

Then in any minimal spanning tree, we can safely assume that there is a path to

This argument solves the problem since the process always converges to the root,

Conclusion

We have used tree surgery to show that the inferior social norm will prevail in the long run. Even though

This result confirms what we had suspected from simulations, but the power of the theoretical analysis is that it tells us more. Suppose we change the payoffs to

Then by following the same theoretical analysis, we would discover that

The study of social dynamics with the mathematical apparatus of stochastic stability is an active field of research going through a renaissance

Algorithmic approach

The theorem above reduces the problems of predicting the emergence of norms to the minimum arborescence problem, the problem of finding a minimum directed spanning tree for a given graph. The root of this graph is what will (almost always) eventually happen in society. In other words, we can show that the pattern in the animation shown at the beginning of this tutorial is guaranteed to eventually occur in the limit if we find a corresponding graph-theoretic tree-surgery argument.

There are well-known fast algorithms that automate this problem. A famous approach is the Chu–Liu/Edmonds' algorithm

We will show a simple implementation in Python. Assume 9 players in a

First, we import the libraries.

import numpy as np

import matplotlib.pyplot as plt

import networkx as nx

We will need to convert decimal indices of states to their binary representation[5] and back:

def binatodeci(binary):

return sum(val*(2**idx) for idx, val in enumerate(reversed(binary)))

We now construct a graph

# Generate an empty digraph

G = nx.DiGraph()

G.add_nodes_from(range(2**9))

# Add edges by going over all states

for state in range(2**9):

state_bin = np.array([int(i) for i in list('{0:09b}'.format(state))]).reshape(3,3)

# Nine possible transitions, one for each player

for x in range(3):

for y in range(3):

# The new state if the chosen player switches

new_state_bin = np.copy(state_bin)

new_state_bin[x,y]=1-new_state_bin[x,y]

new_state=binatodeci(new_state_bin.flatten().tolist())

# Number of neighbors with action A

n = np.sum([state_bin[(x-1) % 3, (y-1) % 3],

state_bin[(x+1) % 3, (y-1) % 3],

state_bin[(x-1) % 3, (y+1) % 3],

state_bin[(x+1) % 3, (y+1) % 3]])

# Add an edge and a cost depending on

# whether the transition is free or requires noisy behavior

if state_bin[x,y] == 0:

if n*10 >= 4*4:

G.add_edge(new_state,state,weight=0)

else:

G.add_edge(new_state,state,weight=1)

else:

if n*10 <= 4*4:

G.add_edge(new_state,state,weight=0)

else:

G.add_edge(new_state,state,weight=1)

The actual code for solving the problem is just

root_node = [n for n,d in spanning_tree.in_degree() if d==0]

print(np.array([int(i) for i in list('{0:09b}'.format(root_node[0]))]).reshape(3,3))

This code will output the final social norm that the players choose in the limit, assuming it is unique.

Exercise

It is reasonable to generalize from a grid world to a society living on a general graph

M = nx.gnp_random_graph(9,0.5)

nx.draw(M, with_labels=True)

Different graph generators offer a different structure for the problem. A society living on a one-dimensional circle is a very common model. Concluding the tutorial, the proposed exercise is to generalize the code above to predict the behavior of a society living on the graph

References

The code is also available as an iPython notebook on github https://github.com/artdol/spanning_trees ↩︎

A complete editable and downloadable netlogo web implementation of this model is live at http://modelingcommons.org/browse/one_model/6958 ↩︎

This specification is technically a so-called "lenient" cost that helps ensure that the stationary distribution exists. We will gloss over the difference. Please refer to

[7] for a detailed treatment. ↩︎ https://networkx.org/documentation/networkx-1.10/reference/generated/networkx.algorithms.tree.branchings.minimum_spanning_arborescence.html ↩︎

By Cory Kramer @ https://stackoverflow.com/questions/64391524/python-converting-binary-list-to-decimal ↩︎